
This article presents a Python code example that scrapes book information from Dangdang, JD, and Amazon.

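Notes: 1. The program stores its results in a MySQL database (the code connects through pymysql). Before running it, edit the database connection settings at the top of the program.

2. It requires the bs4, requests, and pymysql libraries.

3. It supports multithreading.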
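The multithreading in note 3 amounts to one thread per site, all started together and then joined. The sketch below shows only that pattern, with a placeholder in place of the real fetching and parsing. `SiteThread`, `crawl_all`, and the fake rows are hypothetical names introduced for illustration and are not part of the original program.

```python
import threading


class SiteThread(threading.Thread):
    """Hypothetical worker: one thread per site, results collected under a lock."""

    def __init__(self, site, keyword, results, lock):
        super().__init__()
        self.site = site
        self.keyword = keyword
        self.results = results
        self.lock = lock

    def run(self):
        # Placeholder for the real fetch-and-parse step (assumption: three rows per site).
        rows = [(self.site, '{} book {}'.format(self.keyword, i)) for i in range(1, 4)]
        # The result list is shared by all threads, so guard the write.
        with self.lock:
            self.results.extend(rows)


def crawl_all(keyword, sites=('DangDang', 'Amazon', 'JD')):
    """Start one thread per site, wait for all of them, and return the combined rows."""
    results = []
    lock = threading.Lock()
    threads = [SiteThread(site, keyword, results, lock) for site in sites]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

Calling `crawl_all('Android')` returns the rows from all three placeholder workers. The same lock idea applies to any resource the threads share, such as a single database cursor.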


```python
from bs4 import BeautifulSoup
import re, requests, pymysql, threading, os, traceback

# Database connection: edit these settings to match your own MySQL server.
try:
    conn = pymysql.connect(host='127.0.0.1', port=3306, user='root', passwd='root', db='book', charset="utf8")
    cursor = conn.cursor()
except Exception:
    # Nothing below can work without a connection, so stop here.
    raise SystemExit('\nError: database connection failed')

# The three threads share one connection and cursor, so serialize access to them.
dbLock = threading.Lock()


# Return the HTML of the given page
def getHTMLText(url):
    try:
        headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/56.0.2924.87 Safari/537.36'}
        r = requests.get(url, headers=headers)
        r.raise_for_status()
        r.encoding = r.apparent_encoding
        return r.text
    except Exception:
        return ''


# Return a BeautifulSoup object for the given url
def getSoupObject(url):
    try:
        html = getHTMLText(url)
        soup = BeautifulSoup(html, 'html.parser')
        return soup
    except Exception:
        return ''


# Get the total number of result pages for the keyword on a book site
def getPageLength(webSiteName, url):
    try:
        soup = getSoupObject(url)
        if webSiteName == 'DangDang':
            a = soup('a', {'name': 'bottom-page-turn'})
            return a[-1].string
        elif webSiteName == 'Amazon':
            a = soup('span', {'class': 'pagnDisabled'})
            return a[-1].string
    except Exception:
        print('\nError: failed to get the total page count for {}...'.format(webSiteName))
        return -1


class DangDangThread(threading.Thread):
    def __init__(self, keyword):
        threading.Thread.__init__(self)
        self.keyword = keyword

    def run(self):
        print('\nInfo: starting to crawl Dangdang data...')
        count = 1
        # Total number of pages
        length = getPageLength('DangDang', 'http://search.dangdang.com/?key={}'.format(self.keyword))
        # The table name is built from the keyword, so the keyword must be a valid identifier fragment.
        tableName = 'db_{}_dangdang'.format(self.keyword)
        try:
            print('\nInfo: creating the DangDang table...')
            with dbLock:
                cursor.execute('create table {} (id int ,title text,prNow text,prPre text,link text)'.format(tableName))
            print('\nInfo: starting to crawl Dangdang pages...')
            # +1 so that the last page is included
            for i in range(1, int(length) + 1):
                url = 'http://search.dangdang.com/?key={}&page_index={}'.format(self.keyword, i)
                soup = getSoupObject(url)
                lis = soup('li', {'class': re.compile(r'line'), 'id': re.compile(r'p')})
                for li in lis:
                    a = li.find_all('a', {'name': 'itemlist-title', 'dd_name': '单品标题'})
                    pn = li.find_all('span', {'class': 'search_now_price'})
                    pp = li.find_all('span', {'class': 'search_pre_price'})
                    if a:
                        link = a[0].attrs['href']
                        title = a[0].attrs['title'].strip()
                    else:
                        link = 'NULL'
                        title = 'NULL'
                    if pn:
                        prNow = pn[0].string
                    else:
                        prNow = 'NULL'
                    if pp:
                        prPre = pp[0].string
                    else:
                        prPre = 'NULL'
                    # Pass the values as parameters so quotes in titles cannot break the statement.
                    sql = 'insert into {} (id,title,prNow,prPre,link) values (%s,%s,%s,%s,%s)'.format(tableName)
                    with dbLock:
                        cursor.execute(sql, (count, title, prNow, prPre, link))
                    print('\rInfo: storing Dangdang data, current id: {}'.format(count), end='')
                    count += 1
            with dbLock:
                conn.commit()
        except Exception:
            # Report the failure instead of silently discarding it.
            traceback.print_exc()


class AmazonThread(threading.Thread):
    def __init__(self, keyword):
        threading.Thread.__init__(self)
        self.keyword = keyword

    def run(self):
        print('\nInfo: starting to crawl Amazon data...')
        count = 1
        # Total number of pages
        length = getPageLength('Amazon', 'https://www.amazon.cn/s/keywords={}'.format(self.keyword))
        tableName = 'db_{}_amazon'.format(self.keyword)
        try:
            print('\nInfo: creating the Amazon table...')
            with dbLock:
                cursor.execute('create table {} (id int ,title text,prNow text,link text)'.format(tableName))
            print('\nInfo: starting to crawl Amazon pages...')
            # +1 so that the last page is included
            for i in range(1, int(length) + 1):
                url = 'https://www.amazon.cn/s/keywords={}&page={}'.format(self.keyword, i)
                soup = getSoupObject(url)
                lis = soup('li', {'id': re.compile(r'result_')})
                for li in lis:
                    a = li.find_all('a', {'class': 'a-link-normal s-access-detail-page a-text-normal'})
                    pn = li.find_all('span', {'class': 'a-size-base a-color-price s-price a-text-bold'})
                    if a:
                        link = a[0].attrs['href']
                        title = a[0].attrs['title'].strip()
                    else:
                        link = 'NULL'
                        title = 'NULL'
                    if pn:
                        prNow = pn[0].string
                    else:
                        prNow = 'NULL'
                    sql = 'insert into {} (id,title,prNow,link) values (%s,%s,%s,%s)'.format(tableName)
                    with dbLock:
                        cursor.execute(sql, (count, title, prNow, link))
                    print('\rInfo: storing Amazon data, current id: {}'.format(count), end='')
                    count += 1
            with dbLock:
                conn.commit()
        except Exception:
            traceback.print_exc()


class JDThread(threading.Thread):
    def __init__(self, keyword):
        threading.Thread.__init__(self)
        self.keyword = keyword

    def run(self):
        print('\nInfo: starting to crawl JD data...')
        count = 1
        tableName = 'db_{}_jd'.format(self.keyword)
        try:
            print('\nInfo: creating the JD table...')
            with dbLock:
                cursor.execute('create table {} (id int,title text,prNow text,link text)'.format(tableName))
            print('\nInfo: starting to crawl JD pages...')
            # JD's page count is not looked up; pages 1 to 99 are requested.
            for i in range(1, 100):
                url = 'https://search.jd.com/Search?keyword={}&page={}'.format(self.keyword, i)
                soup = getSoupObject(url)
                lis = soup('li', {'class': 'gl-item'})
                for li in lis:
                    a = li.find_all('div', {'class': 'p-name'})
                    pn = li.find_all('div', {'class': 'p-price'})[0].find_all('i')
                    if a:
                        link = 'http:' + a[0].find_all('a')[0].attrs['href']
                        title = a[0].find_all('em')[0].get_text()
                    else:
                        link = 'NULL'
                        title = 'NULL'
                    if len(link) > 128:
                        link = 'TooLong'
                    if pn:
                        prNow = '¥' + pn[0].string
                    else:
                        prNow = 'NULL'
                    sql = 'insert into {} (id,title,prNow,link) values (%s,%s,%s,%s)'.format(tableName)
                    with dbLock:
                        cursor.execute(sql, (count, title, prNow, link))
                    print('\rInfo: storing JD data, current id: {}'.format(count), end='')
                    count += 1
            with dbLock:
                conn.commit()
        except Exception:
            traceback.print_exc()


def closeDB():
    # Close the cursor before the connection it belongs to.
    cursor.close()
    conn.close()


def main():
    print('Info: before using this program, manually create an empty database named Book and edit the database connection statement at the top of this program')
    keyword = input("\nEnter the keyword to crawl: ")

    dangdangThread = DangDangThread(keyword)
    amazonThread = AmazonThread(keyword)
    jdThread = JDThread(keyword)
    dangdangThread.start()
    amazonThread.start()
    jdThread.start()
    dangdangThread.join()
    amazonThread.join()
    jdThread.join()
    closeDB()
    print('\nCrawling finished, about to exit....')
    # Windows only: wait for a key press before the console window closes.
    os.system('pause')


if __name__ == '__main__':
    main()
```

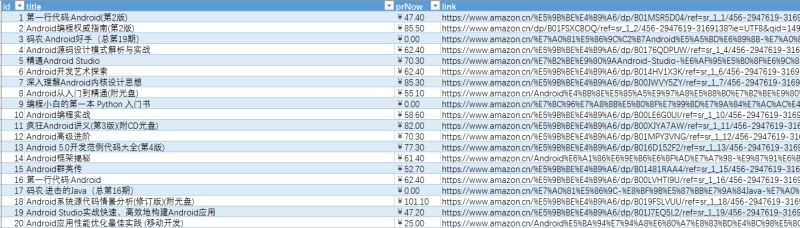

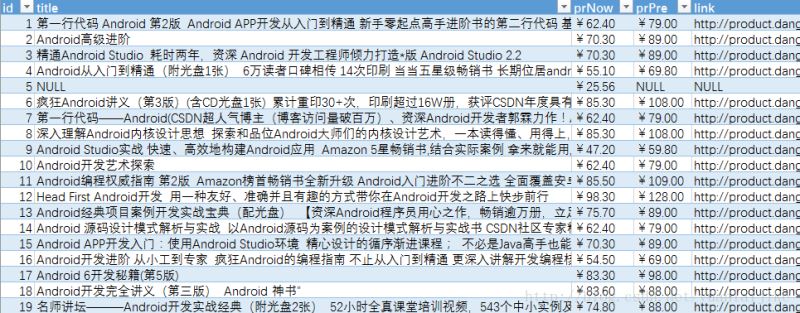

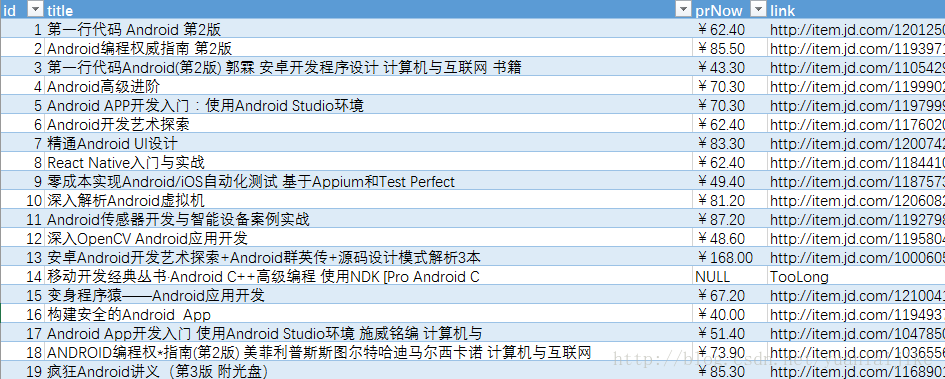

Sample screenshots (above): partial results for the keyword "Android", already exported to Excel.
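The article does not say how the results were exported to Excel. One simple route, sketched here as an assumption rather than the author's method, is to write the rows as CSV, which Excel can open directly. `rows_to_csv` and the sample row are hypothetical; the column names follow the `id, title, prNow, link` layout of the JD and Amazon tables.

```python
import csv
import io


def rows_to_csv(rows, header=('id', 'title', 'prNow', 'link')):
    """Serialize result rows to CSV text; fields containing commas are quoted automatically."""
    buf = io.StringIO(newline='')
    writer = csv.writer(buf)
    writer.writerow(header)
    writer.writerows(rows)
    return buf.getvalue()


# Hypothetical sample row, shaped like one record fetched from a results table.
sample = [(1, 'Android, 2nd ed.', '¥59.00', 'http://example.com/1')]
csv_text = rows_to_csv(sample)
```

When saving the text to a file for Excel, opening the file with `encoding='utf-8-sig'` and `newline=''` usually helps Excel detect the encoding of Chinese titles and the currency symbol.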

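Summary

That is everything in this article's Python code example for scraping book information from Dangdang, JD, and Amazon. I hope it is useful. If you spot any shortcomings, please point them out in a comment.

Original article: http://blog.csdn.net/yuanlaijike/article/details/65936197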
