
This CFSDN blog post, "Pytorch DataLoader shuffle verification", was collected and compiled by the author.

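With `shuffle=False`, the data is returned in its original order.

With `shuffle=True`, the data is randomly shuffled, with a new order drawn each time the DataLoader is iterated (i.e. every epoch).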

```python
import h5py
import numpy as np
import torch
from torch.utils.data import DataLoader, Dataset

data1 = np.array([[1, 2, 3],
                  [2, 5, 6],
                  [3, 5, 6],
                  [4, 5, 6]])
data2 = np.array([[1, 1, 1],
                  [1, 2, 6],
                  [1, 3, 6],
                  [1, 4, 6]])

# Write the toy data and labels; the with-block closes the file
# before it is reopened for reading below.
with h5py.File('train.h5', 'w') as h5f:
    h5f.create_dataset('data', data=data1)
    h5f.create_dataset('label', data=data2)


class H5Dataset(Dataset):
    def __init__(self):
        h5f = h5py.File('train.h5', 'r')
        self.data = h5f['data']
        self.label = h5f['label']

    def __getitem__(self, index):
        data = torch.from_numpy(self.data[index])
        label = torch.from_numpy(self.label[index])
        return data, label

    def __len__(self):
        assert self.data.shape[0] == self.label.shape[0], "wrong data length"
        return self.data.shape[0]


dataset_train = H5Dataset()
loader_train = DataLoader(dataset=dataset_train, batch_size=2, shuffle=True)
for i, data in enumerate(loader_train):
    train_data, label = data
    print(train_data)  # with shuffle=True the row order differs between runs
```
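To check the two settings side by side without writing a file, the same rows can be held in memory. This is a minimal sketch, assuming PyTorch 1.6 or later (for the `generator` argument of `DataLoader`); the helper `first_column_order` is hypothetical and simply records the order in which rows come out of the loader. A seeded `torch.Generator` makes the shuffled order reproducible.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Same toy rows as data1 above, kept in memory instead of in an HDF5 file.
data = torch.tensor([[1, 2, 3], [2, 5, 6], [3, 5, 6], [4, 5, 6]])
dataset = TensorDataset(data)


def first_column_order(shuffle, seed=0):
    """Hypothetical helper: first column of each row, in the order the loader yields it."""
    # A seeded generator makes the shuffled order identical across runs.
    g = torch.Generator().manual_seed(seed)
    loader = DataLoader(dataset, batch_size=2, shuffle=shuffle, generator=g)
    order = []
    for (batch,) in loader:  # TensorDataset items are 1-tuples
        order.extend(batch[:, 0].tolist())
    return order


print(first_column_order(shuffle=False))  # [1, 2, 3, 4]
print(first_column_order(shuffle=True))   # some permutation of [1, 2, 3, 4]
```

With `shuffle=False` the order always matches the dataset; with `shuffle=True` only the set of rows is guaranteed, not their order.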

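At first I was confused about data augmentation: I could only find data transforms (`torchvision.transforms`), not anything explicitly called augmentation. I later figured out that in PyTorch, data augmentation is achieved by `torchvision.transforms`, `torch.utils.data.DataLoader`, and multiple epochs working together.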

The transforms used here are as follows:

```python
from torchvision import transforms

composed = transforms.Compose([transforms.Resize((448, 448)),            # resize
                               transforms.RandomCrop(300),               # random crop
                               transforms.ToTensor(),
                               transforms.Normalize(mean=[0.5, 0.5, 0.5],  # normalize
                                                    std=[0.5, 0.5, 0.5])])
```

A simple data-reading class that only returns the image in PIL format (or the output of `transform`, if one is given):

```python
import csv
import os

from PIL import Image
from torch.utils import data


class MyDataset(data.Dataset):
    def __init__(self, labels_file, root_dir, transform=None):
        # Each CSV row holds an image file name in its first column.
        with open(labels_file) as csvfile:
            self.labels_file = list(csv.reader(csvfile))
        self.root_dir = root_dir
        self.transform = transform

    def __len__(self):
        return len(self.labels_file)

    def __getitem__(self, idx):
        # Build the full path from the stored root directory.
        im_name = os.path.join(self.root_dir, self.labels_file[idx][0])
        im = Image.open(im_name).convert("RGB")  # 3 channels, matching Normalize
        if self.transform:
            im = self.transform(im)  # runs again on every access
        return im
```
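The main program below reads from the author's local folder `F:/test_temp`. To try it elsewhere, one option is to generate a stand-in folder first. This is a sketch under assumptions: the helper `make_test_folder`, the file names `img_0.png` and so on, and the second (label) column are all hypothetical; the only layout `MyDataset` actually relies on is an image file name in the first CSV column.

```python
import csv
import os
import tempfile

import numpy as np
from PIL import Image


def make_test_folder(n_images=3, size=(500, 400), seed=0):
    """Hypothetical helper: a temp folder with random RGB images and a
    labels.csv whose first column holds each image's file name."""
    root_dir = tempfile.mkdtemp()
    rng = np.random.default_rng(seed)
    rows = []
    for i in range(n_images):
        name = f"img_{i}.png"
        # numpy shape is (height, width, 3); PIL's size is (width, height).
        pixels = rng.integers(0, 256, size=(size[1], size[0], 3), dtype=np.uint8)
        Image.fromarray(pixels).save(os.path.join(root_dir, name))
        rows.append([name, i])  # second column: a hypothetical label
    labels_file = os.path.join(root_dir, "labels.csv")
    with open(labels_file, "w", newline="") as f:
        csv.writer(f).writerows(rows)
    return labels_file, root_dir


labels_file, root_dir = make_test_folder()
print(labels_file)
```

The returned `labels_file` and `root_dir` can then replace the two hard-coded paths in the main program.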

Below is the main program:

```python
import matplotlib.pyplot as plt
from torch.utils import data

labels_file = "F:/test_temp/labels.csv"
root_dir = "F:/test_temp"
dataset_transform = MyDataset(labels_file, root_dir, transform=composed)
dataloader = data.DataLoader(dataset_transform, batch_size=1, shuffle=False)

# The original dataset has 3 images; with batch_size=1 and 2 epochs,
# every image is shown once per epoch (6 images in total).
for epoch in range(2):
    plt.figure(figsize=(6, 6))
    for ind, i in enumerate(dataloader):
        # i has shape (1, C, H, W): take the single image, move channels last.
        a = i[0, :, :, :].numpy().transpose((1, 2, 0))
        a = a * 0.5 + 0.5  # undo Normalize(0.5, 0.5) so imshow gets [0, 1]
        plt.subplot(1, 3, ind + 1)
        plt.imshow(a)
plt.show()
```

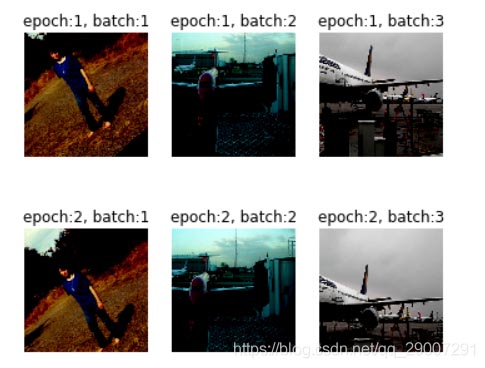

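As the figures above show, the transform is re-applied to the original images in every epoch: `RandomCrop` picks a new random region each time an image is loaded, so the same image yields a different sample in each epoch. This is what produces data augmentation.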
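The same mechanism can be seen without any images. In this minimal sketch, the hypothetical `NoisyDataset` adds fresh random noise inside `__getitem__`, standing in for `RandomCrop`; with `shuffle=False` both epochs visit the items in the same order, yet the values differ because the random transform runs on every access rather than once up front.

```python
import torch
from torch.utils.data import DataLoader, Dataset


class NoisyDataset(Dataset):
    """Hypothetical toy dataset: its 'augmentation' adds new random noise
    each time an item is fetched."""

    def __init__(self, n=3):
        self.base = torch.arange(n, dtype=torch.float32)  # fixed originals

    def __len__(self):
        return len(self.base)

    def __getitem__(self, idx):
        # The random transform runs here, on every access.
        return self.base[idx] + 0.1 * torch.randn(())


loader = DataLoader(NoisyDataset(), batch_size=1, shuffle=False)

torch.manual_seed(0)
epochs = []
for epoch in range(2):
    # Each batch has shape (1,); concatenate them into one tensor per epoch.
    epochs.append(torch.cat(list(loader)))

print(epochs[0])
print(epochs[1])  # same items in the same order, different random values
```

The dataset itself never grows; the extra variety comes from re-running the transform each epoch.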

The above is based on my personal experience; I hope it serves as a useful reference.

Original article: https://blog.csdn.net/qq_35752161/article/details/110875040
