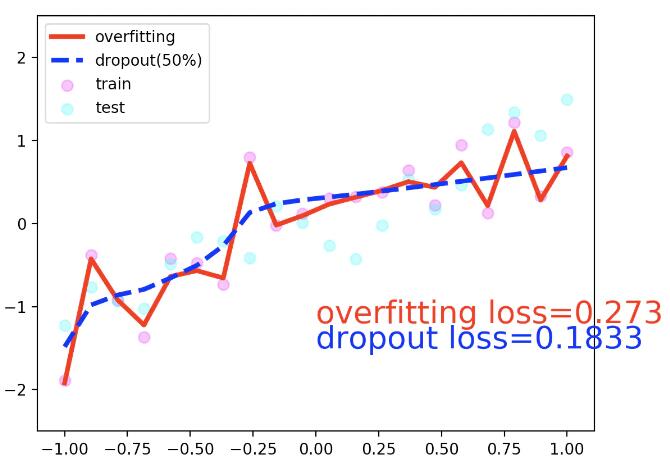

This CFSDN blog post, "pytorch Dropout过拟合的操作" (Using Dropout against overfitting in PyTorch), was collected and compiled by the author.

```python
import torch
import matplotlib.pyplot as plt

torch.manual_seed(1)  # reproducible results

N_SAMPLES = 20
N_HIDDEN = 300

# training data: y = x plus Gaussian noise
x = torch.unsqueeze(torch.linspace(-1, 1, N_SAMPLES), 1)
y = x + 0.3 * torch.normal(torch.zeros(N_SAMPLES, 1), torch.ones(N_SAMPLES, 1))

# test data: same inputs, independently drawn noise
test_x = torch.unsqueeze(torch.linspace(-1, 1, N_SAMPLES), 1)
test_y = test_x + 0.3 * torch.normal(torch.zeros(N_SAMPLES, 1), torch.ones(N_SAMPLES, 1))

# show data
# plt.scatter(x.numpy(), y.numpy(), c="magenta", s=50, alpha=0.5, label="train")
# plt.scatter(test_x.numpy(), test_y.numpy(), c="cyan", s=50, alpha=0.5, label="test")
# plt.legend(loc="upper left")
# plt.ylim((-2.5, 2.5))
# plt.show()

# a large network without regularization: prone to overfitting 20 points
net_overfitting = torch.nn.Sequential(
    torch.nn.Linear(1, N_HIDDEN),
    torch.nn.ReLU(),
    torch.nn.Linear(N_HIDDEN, N_HIDDEN),
    torch.nn.ReLU(),
    torch.nn.Linear(N_HIDDEN, 1),
)

# the same network with 50% dropout after each hidden linear layer
net_dropped = torch.nn.Sequential(
    torch.nn.Linear(1, N_HIDDEN),
    torch.nn.Dropout(0.5),
    torch.nn.ReLU(),
    torch.nn.Linear(N_HIDDEN, N_HIDDEN),
    torch.nn.Dropout(0.5),
    torch.nn.ReLU(),
    torch.nn.Linear(N_HIDDEN, 1),
)

print(net_overfitting)
print(net_dropped)

optimizer_ofit = torch.optim.Adam(net_overfitting.parameters(), lr=0.01)
optimizer_drop = torch.optim.Adam(net_dropped.parameters(), lr=0.01)
loss_func = torch.nn.MSELoss()

plt.ion()  # interactive mode for live plotting

for t in range(500):
    pred_ofit = net_overfitting(x)
    pred_drop = net_dropped(x)
    loss_ofit = loss_func(pred_ofit, y)
    loss_drop = loss_func(pred_drop, y)

    optimizer_ofit.zero_grad()
    optimizer_drop.zero_grad()
    loss_ofit.backward()
    loss_drop.backward()
    optimizer_ofit.step()
    optimizer_drop.step()

    if t % 10 == 0:
        # switch to evaluation mode so Dropout is disabled for test predictions
        net_overfitting.eval()
        net_dropped.eval()

        plt.cla()
        with torch.no_grad():  # no gradients needed for evaluation
            test_pred_ofit = net_overfitting(test_x)
            test_pred_drop = net_dropped(test_x)
            test_loss_ofit = loss_func(test_pred_ofit, test_y).item()
            test_loss_drop = loss_func(test_pred_drop, test_y).item()

        plt.scatter(x.numpy(), y.numpy(), c="magenta", s=50, alpha=0.3, label="train")
        plt.scatter(test_x.numpy(), test_y.numpy(), c="cyan", s=50, alpha=0.3, label="test")
        plt.plot(test_x.numpy(), test_pred_ofit.numpy(), "r-", lw=3, label="overfitting")
        plt.plot(test_x.numpy(), test_pred_drop.numpy(), "b--", lw=3, label="dropout(50%)")
        plt.text(0, -1.2, "overfitting loss=%.4f" % test_loss_ofit, fontdict={"size": 20, "color": "red"})
        plt.text(0, -1.5, "dropout loss=%.4f" % test_loss_drop, fontdict={"size": 20, "color": "blue"})
        plt.legend(loc="upper left")
        plt.ylim((-2.5, 2.5))
        plt.pause(0.1)

        # back to training mode so Dropout is active again
        net_overfitting.train()
        net_dropped.train()

plt.ioff()
plt.show()
```
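The training loop calls `eval()` before plotting the test predictions and `train()` afterwards because `torch.nn.Dropout` behaves differently in the two modes. The following minimal sketch uses a smaller network with the same layer pattern as `net_dropped` (the hidden width of 50 is an arbitrary choice for illustration) to show that in training mode two forward passes on the same input generally differ, while in evaluation mode the Dropout layer passes its input through unchanged and the outputs are deterministic.

```python
import torch

torch.manual_seed(0)

# Same layer pattern as net_dropped, but smaller; the width 50 is an arbitrary choice.
demo_net = torch.nn.Sequential(
    torch.nn.Linear(1, 50),
    torch.nn.Dropout(0.5),
    torch.nn.ReLU(),
    torch.nn.Linear(50, 1),
)
x = torch.unsqueeze(torch.linspace(-1, 1, 20), 1)

with torch.no_grad():
    demo_net.train()  # Dropout active: a new random mask is drawn on every forward pass
    train_out1, train_out2 = demo_net(x), demo_net(x)

    demo_net.eval()   # Dropout inactive: the Dropout layer returns its input unchanged
    eval_out1, eval_out2 = demo_net(x), demo_net(x)

print(torch.equal(train_out1, train_out2))  # almost surely False
print(torch.equal(eval_out1, eval_out2))    # True
```

Forgetting to call `eval()` before evaluation would therefore make the reported test loss noisy and biased toward the "thinned" networks seen during training.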

Supplement: avoiding overfitting in PyTorch by implementing dropout from scratch.

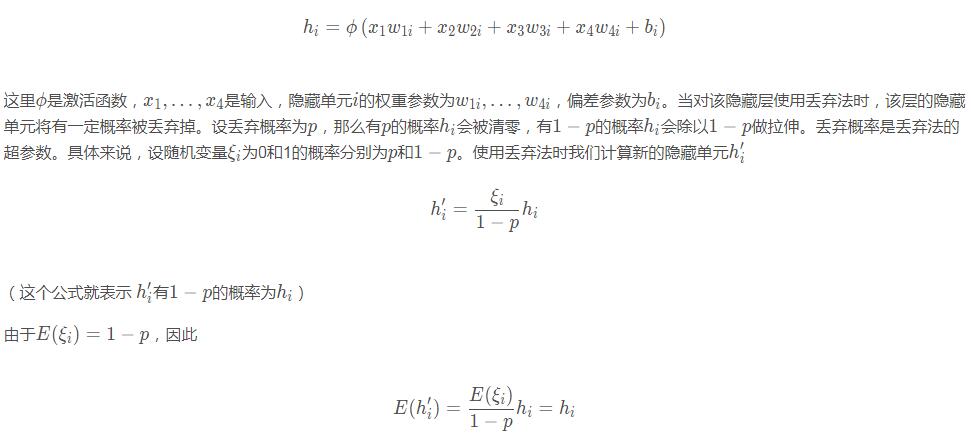

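Consider a multilayer perceptron with a single hidden layer, 4 inputs and 5 hidden units, where hidden unit $h_i$ ($i = 1, \ldots, 5$) is computed as:

$$h_i = \phi\left(x_1 w_{1i} + x_2 w_{2i} + x_3 w_{3i} + x_4 w_{4i} + b_i\right)$$

where $\phi$ is the activation function, $x_1, \ldots, x_4$ are the inputs, $w_{1i}, \ldots, w_{4i}$ are the weights of hidden unit $i$, and $b_i$ is its bias.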

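```python
import torch
import torch.nn as nn
import numpy as np

def dropout(X, drop_prob):
    X = X.float()
    assert 0 <= drop_prob <= 1
    keep_prob = 1 - drop_prob
    # in this case every element is dropped
    if keep_prob == 0:
        return torch.zeros_like(X)
    # keep each element with probability keep_prob (rand_like keeps X's device and dtype)
    mask = (torch.rand_like(X) < keep_prob).float()
    # rescale the kept elements by 1 / keep_prob so the expected value is unchanged
    return mask * X / keep_prob
```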
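The `dropout` function follows the inverted-dropout convention: with drop probability $p$, each hidden value becomes $h_i' = \frac{\xi_i}{1-p} h_i$, where $\xi_i$ is 0 with probability $p$ and 1 with probability $1-p$. Since $E[\xi_i] = 1-p$, we get $E[h_i'] = h_i$, so nothing has to be rescaled at test time. As a quick sanity check (a sketch, not part of the original article), PyTorch's built-in `torch.nn.functional.dropout` uses the same convention: applied to an all-ones tensor, roughly a fraction $p$ of the entries become 0, every surviving entry equals $1/(1-p)$, and the mean stays close to 1.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
X = torch.ones(1000, 1000)  # one million elements, all equal to 1

results = {}
for p in (0.2, 0.5):
    Y = F.dropout(X, p=p, training=True)  # training=True: random mask plus rescaling
    kept = Y[Y != 0]
    results[p] = {
        "zero_fraction": (Y == 0).float().mean().item(),  # close to p
        "kept_value": kept.unique().tolist(),              # every survivor is 1 / (1 - p)
        "mean": Y.mean().item(),                           # close to 1
    }
    print(p, results[p])

# with training=False the input is returned unchanged, like is_training=False in net()
assert torch.equal(F.dropout(X, p=0.5, training=False), X)
```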

```python
# Fashion-MNIST: 28x28 = 784 input features, 10 classes, two hidden layers of 256 units
num_inputs, num_outputs, num_hiddens1, num_hiddens2 = 784, 10, 256, 256

W1 = torch.tensor(np.random.normal(0, 0.01, size=(num_inputs, num_hiddens1)), dtype=torch.float, requires_grad=True)
b1 = torch.zeros(num_hiddens1, requires_grad=True)
W2 = torch.tensor(np.random.normal(0, 0.01, size=(num_hiddens1, num_hiddens2)), dtype=torch.float, requires_grad=True)
b2 = torch.zeros(num_hiddens2, requires_grad=True)
W3 = torch.tensor(np.random.normal(0, 0.01, size=(num_hiddens2, num_outputs)), dtype=torch.float, requires_grad=True)
b3 = torch.zeros(num_outputs, requires_grad=True)

params = [W1, b1, W2, b2, W3, b3]
```

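The model chains fully connected layers with ReLU activations and applies dropout to the output of each activation function.

The drop probability can be set separately for each layer. A common recommendation is to use a smaller drop probability for layers closer to the input.

In this experiment, the drop probability is 0.2 for the first hidden layer and 0.5 for the second hidden layer.

The `is_training` argument tells the model whether it is running in training or test mode, and dropout is applied only in training mode.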

```python
drop_prob1, drop_prob2 = 0.2, 0.5

def net(X, is_training=True):
    X = X.view(-1, num_inputs)
    H1 = (torch.matmul(X, W1) + b1).relu()
    if is_training:  # apply dropout only while training the model
        H1 = dropout(H1, drop_prob1)  # dropout after the first fully connected layer
    H2 = (torch.matmul(H1, W2) + b2).relu()
    if is_training:
        H2 = dropout(H2, drop_prob2)  # dropout after the second fully connected layer
    return torch.matmul(H2, W3) + b3

def evaluate_accuracy(data_iter, net):
    acc_sum, n = 0.0, 0
    with torch.no_grad():  # no gradients needed for evaluation
        for X, y in data_iter:
            if isinstance(net, torch.nn.Module):
                net.eval()  # evaluation mode: this turns dropout off
                acc_sum += (net(X).argmax(dim=1) == y).float().sum().item()
                net.train()  # switch back to training mode
            else:  # custom, function-based model
                if "is_training" in net.__code__.co_varnames:  # the model has an is_training argument
                    # call with is_training=False to turn dropout off
                    acc_sum += (net(X, is_training=False).argmax(dim=1) == y).float().sum().item()
                else:
                    acc_sum += (net(X).argmax(dim=1) == y).float().sum().item()
            n += y.shape[0]
    return acc_sum / n
```
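For comparison, the same model can be written with PyTorch's built-in layers. This is a sketch, assuming 28×28 single-channel Fashion-MNIST inputs (784 features, 10 classes) as in the parameters above: `torch.nn.Dropout` replaces the hand-written `dropout`, and calling `model.eval()` plays the role of `is_training=False`, which is the case handled by the `isinstance(net, torch.nn.Module)` branch of `evaluate_accuracy`.

```python
import torch
from torch import nn

num_inputs, num_outputs, num_hiddens1, num_hiddens2 = 784, 10, 256, 256
drop_prob1, drop_prob2 = 0.2, 0.5

model = nn.Sequential(
    nn.Flatten(),                           # (batch, 1, 28, 28) -> (batch, 784)
    nn.Linear(num_inputs, num_hiddens1),
    nn.ReLU(),
    nn.Dropout(drop_prob1),                 # smaller drop probability near the input
    nn.Linear(num_hiddens1, num_hiddens2),
    nn.ReLU(),
    nn.Dropout(drop_prob2),
    nn.Linear(num_hiddens2, num_outputs),
)

# mirror the from-scratch initialization: N(0, 0.01^2) weights, zero biases
for layer in model:
    if isinstance(layer, nn.Linear):
        nn.init.normal_(layer.weight, mean=0.0, std=0.01)
        nn.init.zeros_(layer.bias)

X = torch.rand(2, 1, 28, 28)  # a fake batch of two images
model.eval()                  # disables both Dropout layers
with torch.no_grad():
    out1, out2 = model(X), model(X)
print(out1.shape)  # torch.Size([2, 10])
```

Because `eval()` and `train()` propagate to every submodule, this version does not need an explicit `is_training` flag.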

```python
import sys
import torchvision

num_epochs, lr, batch_size = 5, 100.0, 256
loss = torch.nn.CrossEntropyLoss()

def load_data_fashion_mnist(batch_size, resize=None, root="~/Datasets/FashionMNIST"):
    """Download the Fashion-MNIST dataset and then load it into memory."""
    trans = []
    if resize:
        trans.append(torchvision.transforms.Resize(size=resize))
    trans.append(torchvision.transforms.ToTensor())
    transform = torchvision.transforms.Compose(trans)
    mnist_train = torchvision.datasets.FashionMNIST(root=root, train=True, download=True, transform=transform)
    mnist_test = torchvision.datasets.FashionMNIST(root=root, train=False, download=True, transform=transform)
    if sys.platform.startswith("win"):
        num_workers = 0  # 0 means no extra worker processes are used to load data
    else:
        num_workers = 4
    train_iter = torch.utils.data.DataLoader(mnist_train, batch_size=batch_size, shuffle=True, num_workers=num_workers)
    test_iter = torch.utils.data.DataLoader(mnist_test, batch_size=batch_size, shuffle=False, num_workers=num_workers)
    return train_iter, test_iter

def sgd(params, lr, batch_size):
    # mini-batch SGD; CrossEntropyLoss already averages over the batch, and dividing
    # by batch_size once more is why lr is set as high as 100.0
    for param in params:
        param.data -= lr * param.grad / batch_size

def train_ch3(net, train_iter, test_iter, loss, num_epochs, batch_size, params=None, lr=None, optimizer=None):
    for epoch in range(num_epochs):
        train_l_sum, train_acc_sum, n = 0.0, 0.0, 0
        for X, y in train_iter:
            y_hat = net(X)
            l = loss(y_hat, y).sum()
            # zero the gradients
            if optimizer is not None:
                optimizer.zero_grad()
            elif params is not None and params[0].grad is not None:
                for param in params:
                    param.grad.data.zero_()
            l.backward()
            if optimizer is None:
                sgd(params, lr, batch_size)
            else:
                optimizer.step()  # used in the "concise implementation of softmax regression" section
            train_l_sum += l.item() * y.shape[0]  # l is a batch mean, so weight it by the batch size
            train_acc_sum += (y_hat.argmax(dim=1) == y).sum().item()
            n += y.shape[0]
        test_acc = evaluate_accuracy(test_iter, net)
        print("epoch %d, loss %.4f, train acc %.3f, test acc %.3f"
              % (epoch + 1, train_l_sum / n, train_acc_sum / n, test_acc))

train_iter, test_iter = load_data_fashion_mnist(batch_size)
train_ch3(net, train_iter, test_iter, loss, num_epochs, batch_size, params, lr)
```
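Why `lr` is as large as 100.0: `torch.nn.CrossEntropyLoss` averages the loss over the batch by default (so `loss(y_hat, y).sum()` is still the batch mean), and, assuming `sgd` is the helper from the PyTorch edition of *Dive into Deep Learning* that updates each parameter as `param -= lr * grad / batch_size`, the gradient is divided by the batch size a second time. The effective step size is therefore `lr / batch_size` = 100 / 256 ≈ 0.39. The sketch below checks that equivalence on a toy least-squares problem (the data and the 5-element parameter are made up for illustration) by comparing the manual update against `torch.optim.SGD` with `lr / batch_size`.

```python
import torch

torch.manual_seed(0)
lr, batch_size = 100.0, 256

# two identical copies of a small parameter vector
w_manual = torch.randn(5, requires_grad=True)
w_optim = w_manual.detach().clone().requires_grad_(True)

X = torch.randn(batch_size, 5)
target = torch.randn(batch_size)

def batch_mean_loss(w):
    # mean over the batch, like CrossEntropyLoss's default reduction="mean"
    return ((X @ w - target) ** 2).mean()

# manual update in the style of the assumed sgd helper: divide by batch_size again
batch_mean_loss(w_manual).backward()
with torch.no_grad():
    w_manual -= lr * w_manual.grad / batch_size

# equivalent built-in optimizer step with an effective learning rate of lr / batch_size
opt = torch.optim.SGD([w_optim], lr=lr / batch_size)
batch_mean_loss(w_optim).backward()
opt.step()

print(lr / batch_size)                    # 0.390625
print(torch.allclose(w_manual, w_optim))  # True
```

In other words, `lr=100.0` with this `sgd` helper behaves like `torch.optim.SGD(params, lr=0.390625)` on the batch-mean loss.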

The above is based on my personal experience; I hope it serves as a useful reference.

Original article: https://www.jianshu.com/p/57f4ed660923
