
This CFSDN blog post, "Implementing the C4.5 Decision Tree Algorithm in Python" (python实现C4.5决策树算法), was collected and compiled by its author.

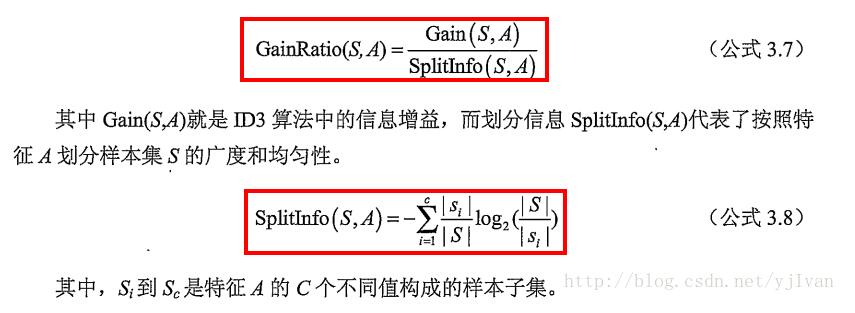

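Instead of the information gain used by ID3, C4.5 selects features by the information gain ratio. This overcomes information gain's bias toward features with many distinct values. The information gain ratio is defined as follows:

$$\mathrm{GainRatio}(D, A) = \frac{\mathrm{Gain}(D, A)}{\mathrm{SplitInfo}_A(D)}, \qquad \mathrm{SplitInfo}_A(D) = -\sum_{j=1}^{n} \frac{|D_j|}{|D|} \log_2 \frac{|D_j|}{|D|}$$

where feature $A$ partitions the dataset $D$ into subsets $D_1, \dots, D_n$ (one per distinct value of $A$), and $\mathrm{Gain}(D, A) = H(D) - \sum_{j=1}^{n} \frac{|D_j|}{|D|} H(D_j)$ is ID3's information gain, with $H$ the entropy of the class labels.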
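To make the bias concrete, here is a minimal sketch comparing information gain and gain ratio on a tiny hypothetical dataset. The rows, the `record_id` column and the helper names `entropy`, `info_gain`, `split_info` and `gain_ratio` are illustrative assumptions, not part of the original code. Because `record_id` is unique per row, it splits the data into pure one-row subsets and gets the highest information gain, but its large split information pulls its gain ratio below that of the genuinely informative `student` feature.

```python
import math
from collections import Counter

# Hypothetical toy data (illustration only): (record_id, student, class_label)
rows = [
    ("r1", "yes", "buy"), ("r2", "yes", "buy"),
    ("r3", "yes", "buy"), ("r4", "yes", "buy"),
    ("r5", "no", "buy"), ("r6", "no", "no"),
    ("r7", "no", "no"), ("r8", "no", "no"),
]


def entropy(values):
    """Shannon entropy (base 2) of a list of discrete values."""
    n = len(values)
    return -sum(c / n * math.log2(c / n) for c in Counter(values).values())


def info_gain(rows, col):
    """ID3 information gain of feature column `col` w.r.t. the last (class) column."""
    labels = [r[-1] for r in rows]
    n = len(rows)
    cond = 0.0  # conditional entropy H(D | A)
    for v in set(r[col] for r in rows):
        subset = [r[-1] for r in rows if r[col] == v]
        cond += len(subset) / n * entropy(subset)
    return entropy(labels) - cond


def split_info(rows, col):
    """SplitInfo_A(D): the entropy of the feature's own value distribution."""
    return entropy([r[col] for r in rows])


def gain_ratio(rows, col):
    """C4.5 gain ratio; 0 when the feature takes a single value (zero split info)."""
    s = split_info(rows, col)
    return info_gain(rows, col) / s if s > 0 else 0.0


for name, col in (("record_id", 0), ("student", 1)):
    print(f"{name:9s} gain={info_gain(rows, col):.3f} ratio={gain_ratio(rows, col):.3f}")
```

On this data it prints `record_id gain=0.954 ratio=0.318` and `student   gain=0.549 ratio=0.549`: information gain would choose `record_id`, while the gain ratio chooses `student`.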

```python
import copy
import math
import pickle

from numpy import argmax, array, ones, zeros


class C45DTree(object):
    def __init__(self):
        # Constructor
        self.tree = {}     # the generated tree
        self.dataSet = []  # the dataset
        self.labels = []   # the feature labels

    # Load data: one tab-separated record per line, class label in the last column
    def loadDataSet(self, path, labels):
        with open(path, "r", encoding="utf-8") as fp:  # read the file contents
            content = fp.read()
        rowList = content.splitlines()  # split into a list of lines
        # strip() removes spaces, tabs, etc., so blank lines are skipped
        recordList = [row.split("\t") for row in rowList if row.strip()]
        self.dataSet = recordList
        self.labels = labels

    # Run the decision-tree construction
    def train(self):
        labels = copy.deepcopy(self.labels)
        self.tree = self.buildTree(self.dataSet, labels)

    # Build the decision tree: the main recursive routine
    def buildTree(self, dataSet, labels):
        cateList = [data[-1] for data in dataSet]  # class-label column of the dataset
        # Stop condition 1: only one class label remains; stop splitting and return it
        if cateList.count(cateList[0]) == len(cateList):
            return cateList[0]
        # Stop condition 2: all features are used up (only the class column is left);
        # return the most frequent class label
        if len(dataSet[0]) == 1:
            return self.maxCate(cateList)
        # Core step: pick the best feature axis of the dataset
        bestFeat, featValueList = self.getBestFeat(dataSet)
        bestFeatLabel = labels[bestFeat]
        tree = {bestFeatLabel: {}}
        del labels[bestFeat]
        for value in featValueList:  # grow the tree recursively
            subLabels = labels[:]  # copy of the remaining feature labels
            # Split the dataset on the best feature column and this value
            splitDataset = self.splitDataSet(dataSet, bestFeat, value)
            subTree = self.buildTree(splitDataset, subLabels)  # build the subtree
            tree[bestFeatLabel][value] = subTree
        return tree

    # Return the class label that occurs most often
    def maxCate(self, cateList):
        items = dict([(cateList.count(i), i) for i in cateList])
        return items[max(items.keys())]

    # Select the best feature by information gain ratio
    def getBestFeat(self, dataSet):
        Num_Feats = len(dataSet[0][:-1])
        totality = len(dataSet)
        BaseEntropy = self.computeEntropy(dataSet)
        ConditionEntropy = []  # conditional entropy of each feature
        splitInfo = []         # split information of each feature (for the C4.5 gain ratio)
        allFeatVList = []
        for f in range(Num_Feats):
            featList = [example[f] for example in dataSet]
            splitI, featureValueList = self.computeSplitInfo(featList)
            allFeatVList.append(featureValueList)
            splitInfo.append(splitI)
            resultGain = 0.0
            for value in featureValueList:
                subSet = self.splitDataSet(dataSet, f, value)
                appearNum = float(len(subSet))
                subEntropy = self.computeEntropy(subSet)
                resultGain += (appearNum / totality) * subEntropy
            ConditionEntropy.append(resultGain)  # total conditional entropy
        infoGainArray = BaseEntropy * ones(Num_Feats) - array(ConditionEntropy)
        # C4.5 gain ratio = information gain / split information. A feature with a
        # single value here has zero split information (and zero gain), so it gets
        # ratio 0 instead of a 0/0 division
        splitInfoArray = array(splitInfo)
        infoGainRatio = zeros(Num_Feats)
        nonZero = splitInfoArray > 0
        infoGainRatio[nonZero] = infoGainArray[nonZero] / splitInfoArray[nonZero]
        bestFeatureIndex = int(argmax(infoGainRatio))
        return bestFeatureIndex, allFeatVList[bestFeatureIndex]

    # Compute the split information of a feature column
    def computeSplitInfo(self, featureVList):
        numEntries = len(featureVList)
        featureValueSetList = list(set(featureVList))
        valueCounts = [featureVList.count(featVec) for featVec in featureValueSetList]
        pList = [float(item) / numEntries for item in valueCounts]
        lList = [item * math.log(item, 2) for item in pList]
        splitInfo = -sum(lList)
        return splitInfo, featureValueSetList

    # Compute the information (Shannon) entropy of the class labels
    def computeEntropy(self, dataSet):
        dataLen = float(len(dataSet))
        cateList = [data[-1] for data in dataSet]  # class labels of the dataset
        # dict with the class label as key and its occurrence count as value
        items = dict([(i, cateList.count(i)) for i in cateList])
        infoEntropy = 0.0
        for key in items:
            # Shannon entropy: H = -sum(p * log2(p))
            prob = float(items[key]) / dataLen
            infoEntropy -= prob * math.log(prob, 2)
        return infoEntropy

    # Split the dataset: keep the rows whose feature `axis` equals `value`,
    # remove that feature column, and return the remaining rows
    # dataSet: the dataset; axis: the feature axis; value: a value of that feature
    def splitDataSet(self, dataSet, axis, value):
        rtnList = []
        for featVec in dataSet:
            if featVec[axis] == value:
                rFeatVec = featVec[:axis]  # list slice: elements 0 .. axis-1
                rFeatVec.extend(featVec[axis + 1:])  # add back the elements after the axis
                rtnList.append(rFeatVec)
        return rtnList

    # Save the tree to a file (pickle requires binary mode)
    def storetree(self, inputTree, filename):
        with open(filename, "wb") as fw:
            pickle.dump(inputTree, fw)

    # Load the tree from a file
    def grabTree(self, filename):
        with open(filename, "rb") as fr:
            return pickle.load(fr)
```

Calling code (the import assumes the class above is saved as `C45DTree.py`):

```python
from C45DTree import C45DTree

dtree = C45DTree()
dtree.loadDataSet("dataset.dat", ["age", "revenue", "student", "credit"])
dtree.train()
dtree.storetree(dtree.tree, "data.tree")
mytree = dtree.grabTree("data.tree")
print(mytree)
```
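The trained tree is a nested dict of the form `{feature_label: {feature_value: subtree_or_class_label}}`, and the original code stops after printing it. The sketch below adds a hypothetical `classify` helper (not part of the original class) that walks such a dict to predict the class of one record. The tree literal and its feature values (`youth`, `senior`, `fair`, ...) are made up for illustration in the same shape, reusing the four feature names from the calling code; they are not the result of training on `dataset.dat`.

```python
def classify(tree, feat_labels, record):
    """Walk a nested-dict decision tree and return the predicted class label.

    tree:        {feature_label: {feature_value: subtree_or_class_label}}
    feat_labels: feature names in the same column order as `record`
    record:      list of feature values (without the class column)
    Returns None if the record has a value that no branch covers.
    """
    node = tree
    while isinstance(node, dict):
        feat_label = next(iter(node))  # the feature tested at this node
        branches = node[feat_label]
        value = record[feat_labels.index(feat_label)]
        if value not in branches:
            return None  # value unseen during training: no branch to follow
        node = branches[value]
    return node  # a leaf holds a class label


# Hypothetical tree in the shape produced by C45DTree.train()
tree = {"age": {
    "youth": {"student": {"no": "no", "yes": "yes"}},
    "middle_aged": "yes",
    "senior": {"credit": {"fair": "yes", "excellent": "no"}},
}}
labels = ["age", "revenue", "student", "credit"]

print(classify(tree, labels, ["youth", "high", "yes", "fair"]))       # yes
print(classify(tree, labels, ["senior", "low", "no", "excellent"]))   # no
```

Looking features up by name rather than by position works because each tree key is a label from the full label list, even though `splitDataSet` removes columns during training.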

That is all for this article. I hope it helps with your studies.

Original article: https://blog.csdn.net/yjIvan/article/details/71272968

