
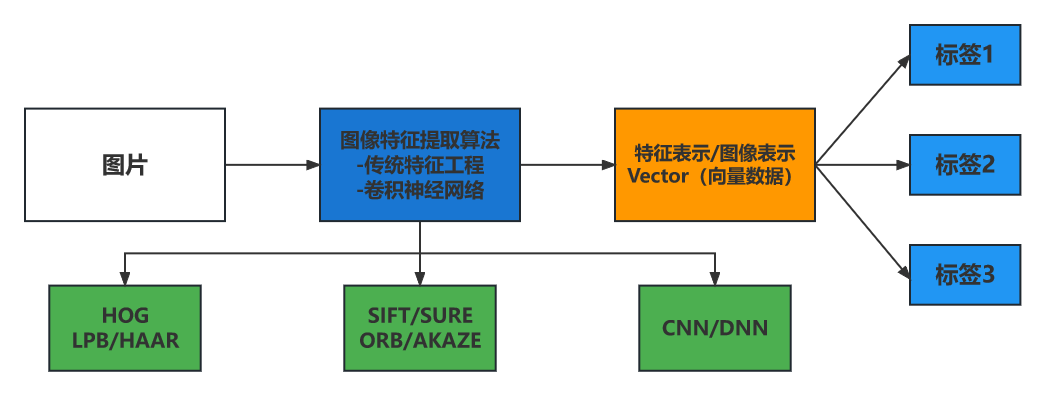

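An image's feature representation is a description unique to that image; it is, in effect, the image's DNA.

Typical applications: image classification, object recognition, feature detection, image alignment/matching, object detection, and image search/comparison.

What is a corner?

A corner is a point where the image gradient changes strongly in every direction.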

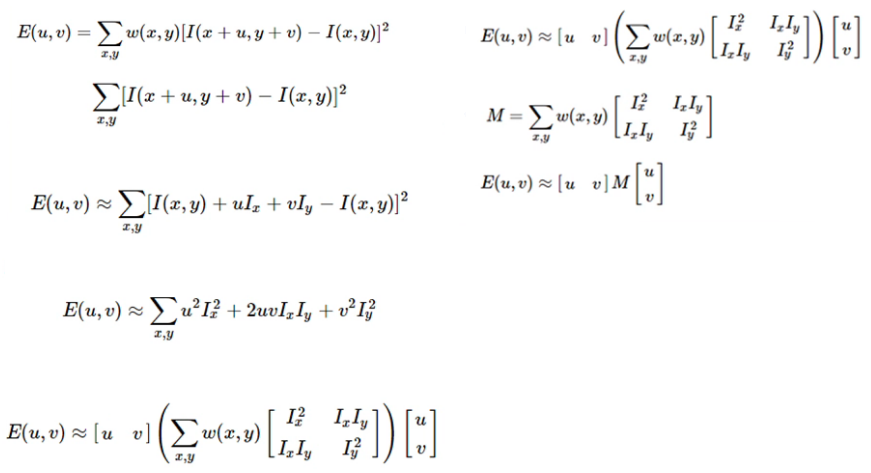

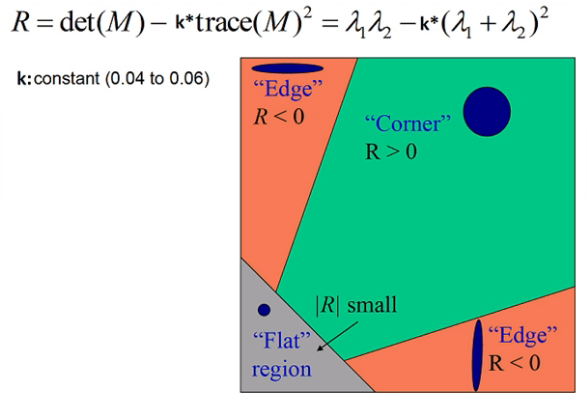

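Harris corner detection algorithm.

// Function signature:

void cv::cornerHarris(

InputArray src, // input: single-channel 8-bit or floating-point image

OutputArray dst, // output: Harris response map (CV_32FC1, same size as src)

int blockSize, // neighborhood (block) size

int ksize, // aperture size of the Sobel operator

double k, // Harris detector free parameter (constant coefficient)

int borderType = BORDER_DEFAULT // pixel extrapolation method at the image border

)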
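
As a rough illustration of what each value in `dst` represents, the sketch below evaluates the Harris response R = det(M) - k * trace(M)^2 for one window. The function name `harris_response` and its scalar inputs (the summed gradient products of the window) are assumptions made for this example; this is not OpenCV's implementation.

```cpp
#include <cassert>

// Harris response for one window, given the structure-tensor sums
//   sxx = sum(Ix * Ix), syy = sum(Iy * Iy), sxy = sum(Ix * Iy)
// where Ix and Iy are the Sobel gradients inside the window.
// Large positive R: corner. Negative R: edge. R near zero: flat region.
double harris_response(double sxx, double syy, double sxy, double k) {
    assert(k > 0.0);  // k is the constant coefficient of the detector
    const double det = sxx * syy - sxy * sxy;
    const double trace = sxx + syy;
    return det - k * trace * trace;
}
```

With k = 0.04, a window whose gradient varies in only one direction (`sxx = 1`, `syy = 0`) gets a negative score, while a window with equal variation in both directions (`sxx = syy = 1`) gets a clearly positive one.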

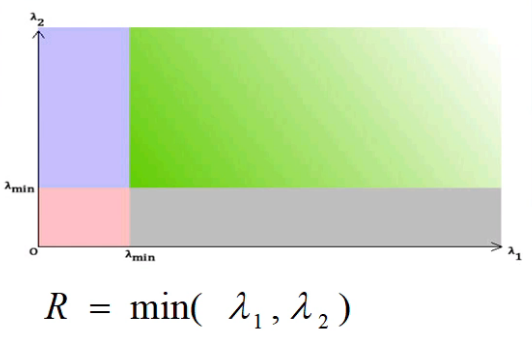

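Shi-Tomasi corner detection algorithm.

// Function signature:

void cv::goodFeaturesToTrack(

InputArray image, // input image (single channel)

OutputArray corners, // output corner coordinates

int maxCorners, // maximum number of corners to return

double qualityLevel, // quality control: corners scoring below qualityLevel times the best score (the smaller of λ1 and λ2) are rejected

double minDistance, // overlap control: minimum distance in pixels between returned corners

InputArray mask = noArray(),

int blockSize = 3,

bool useHarrisDetector = false,

double k = 0.04

)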
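
The Shi-Tomasi score and the role of `qualityLevel` can be sketched in a few lines. The helper names `min_eigenvalue` and `is_good_corner` are hypothetical and exist only for this example; the inputs are the same structure-tensor sums used by the Harris detector.

```cpp
#include <cassert>
#include <cmath>

// Smaller eigenvalue of the 2x2 structure tensor [[sxx, sxy], [sxy, syy]].
// Shi-Tomasi uses min(lambda1, lambda2) directly as the corner score.
double min_eigenvalue(double sxx, double syy, double sxy) {
    const double half_trace = 0.5 * (sxx + syy);
    const double half_diff = 0.5 * (sxx - syy);
    return half_trace - std::sqrt(half_diff * half_diff + sxy * sxy);
}

// Acceptance rule: keep a candidate when its score reaches
// quality_level times the best score found in the image.
bool is_good_corner(double score, double best_score, double quality_level) {
    assert(quality_level > 0.0 && quality_level <= 1.0);
    return score >= quality_level * best_score;
}
```

For example, with `qualityLevel = 0.05` and a best score of 1.0, a candidate scoring 0.06 is kept and one scoring 0.04 is dropped.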

代码实现 。

#include <opencv2/opencv.hpp>

#include <iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

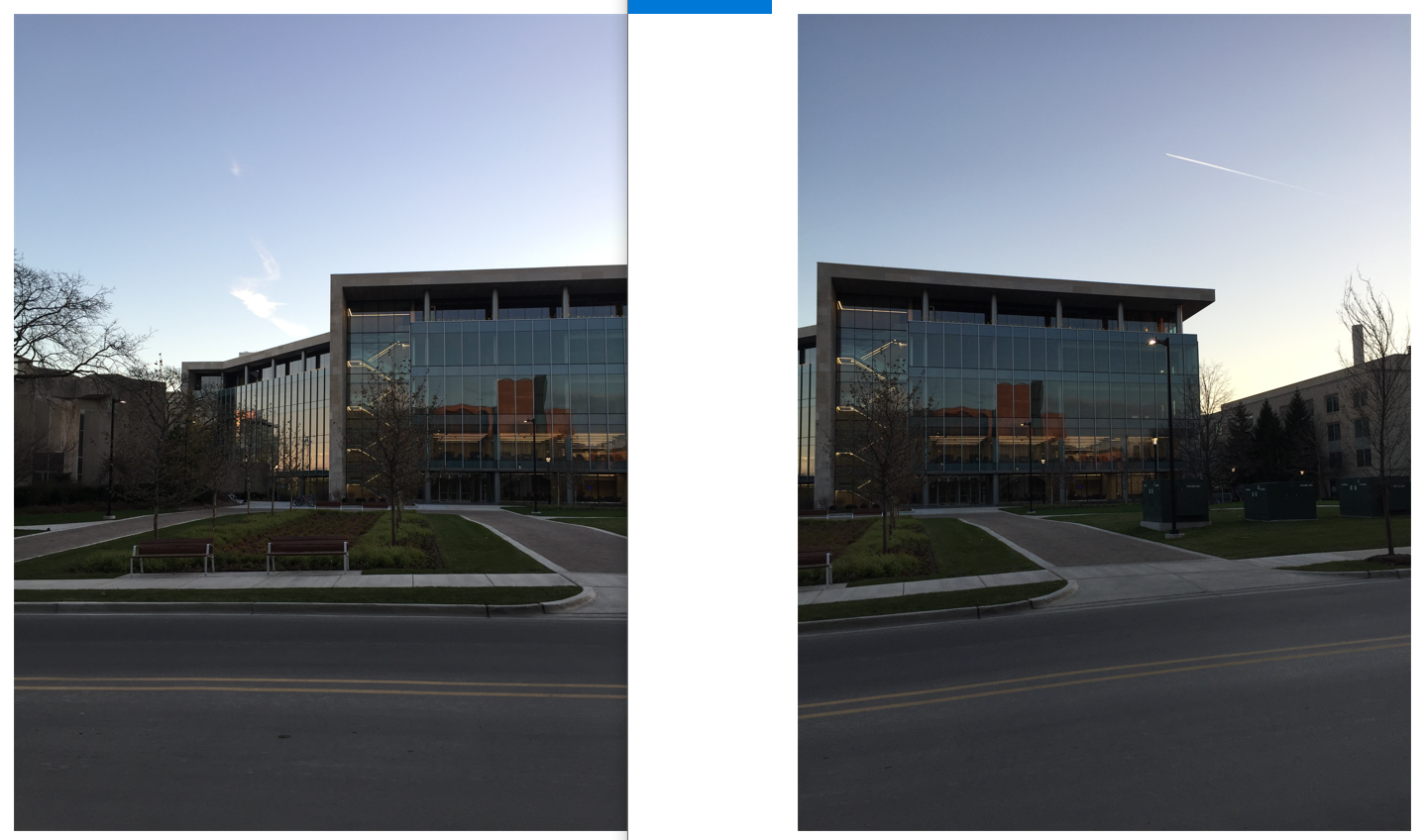

Mat src = imread("D:/images/building.png");

Mat gray;

cvtColor(src, gray, COLOR_BGR2GRAY);

namedWindow("src", WINDOW_FREERATIO);

imshow("src", src);

RNG rng(12345);

vector<Point> points;

goodFeaturesToTrack(gray, points, 400, 0.05, 10);

for (size_t t = 0; t < points.size(); t++) {

circle(src, points[t], 3, Scalar(rng.uniform(0, 255), rng.uniform(0, 255), rng.uniform(0, 255)), 1, LINE_AA);

}

namedWindow("out", WINDOW_FREERATIO);

imshow("out", src);

waitKey(0);

destroyAllWindows();

return 0;

}

Result:

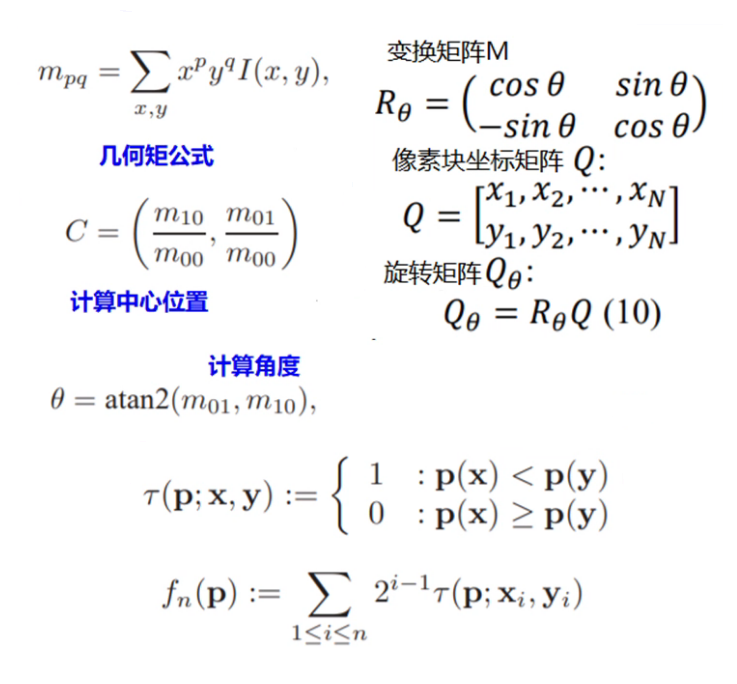

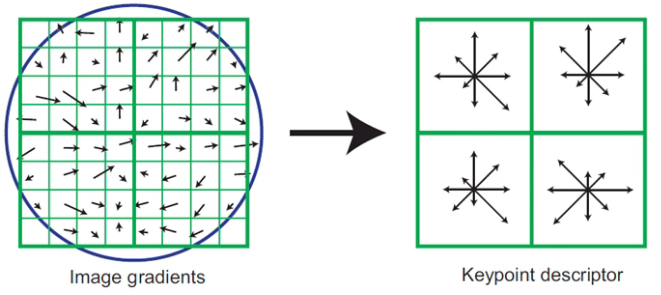

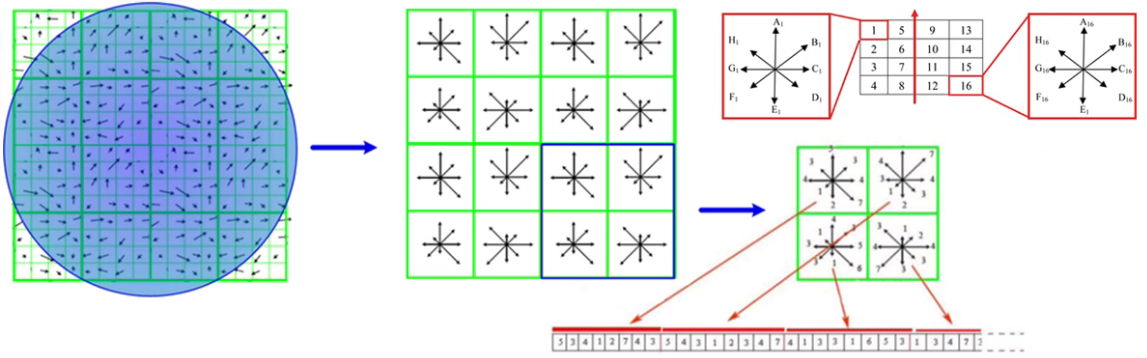

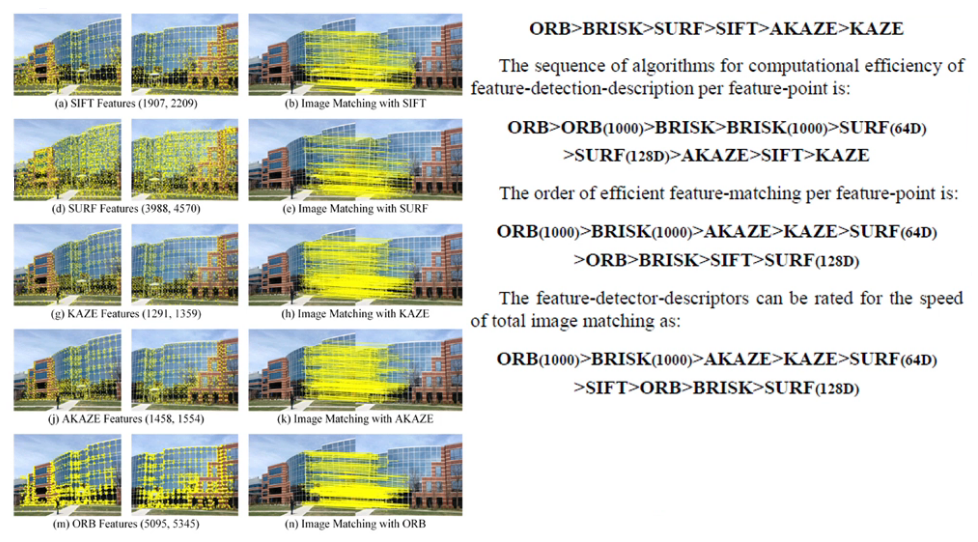

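ORB consists of two parts: fast keypoint localization (FAST) and BRIEF descriptor generation.

FAST keypoint detection: take the current pixel P, a threshold T, and the 16 pixels on a circle around P. P is a keypoint if at least N = 12 contiguous circle pixels are all brighter than P + T or all darker than P - T. Speed-up: check pixels 1, 5, 9, and 13 first, and run the full test only when at least three of them pass. Repeat for every pixel in the image.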
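
The segment test described above can be sketched without OpenCV. The function `is_fast_corner` and its arguments are assumptions for illustration: the caller is expected to supply the 16 ring intensities in circular order, with index 0 standing for pixel 1 of the text.

```cpp
#include <array>
#include <cassert>

// center: intensity of P; ring: the 16 circle intensities in circular order;
// t: threshold T; n: required number of contiguous pixels (12 in the text).
bool is_fast_corner(int center, const std::array<int, 16>& ring, int t, int n) {
    assert(n >= 1 && n <= 16);

    // Quick rejection, valid only for n >= 12: among ring pixels 1, 5, 9, 13
    // at least three must be brighter than P + T or darker than P - T.
    if (n >= 12) {
        const int probes[4] = {0, 4, 8, 12};
        int brighter = 0;
        int darker = 0;
        for (int idx : probes) {
            if (ring[idx] > center + t) ++brighter;
            if (ring[idx] < center - t) ++darker;
        }
        if (brighter < 3 && darker < 3) return false;
    }

    // Full test: walk the ring twice so that runs wrapping past index 15 count.
    int run_brighter = 0;
    int run_darker = 0;
    for (int i = 0; i < 32; ++i) {
        const int v = ring[i % 16];
        run_brighter = (v > center + t) ? run_brighter + 1 : 0;
        run_darker = (v < center - t) ? run_darker + 1 : 0;
        if (run_brighter >= n || run_darker >= n) return true;
    }
    return false;
}
```

A ring with 12 bright pixels in a row passes even when the run wraps around the end of the array, while 11 in a row does not.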

// Create the ORB object

auto orb = cv::ORB::create(500);

virtual void cv::Feature2D::detect(

InputArray image, // input image

std::vector<KeyPoint>& keypoints, // detected keypoints

InputArray mask = noArray() // optional mask

)

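The four most important attributes of the KeyPoint data structure are pt (coordinates), size (diameter of the meaningful neighborhood), angle (orientation), and response (strength of the keypoint).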

#include <opencv2/opencv.hpp>

#include <iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat src = imread("D:/images/building.png");

imshow("input", src);

//Mat gray;

//cvtColor(src, gray, COLOR_BGR2GRAY);

auto orb = ORB::create(500);

vector<KeyPoint> kypts;

orb->detect(src, kypts);

Mat result01, result02, result03;

drawKeypoints(src, kypts, result01, Scalar::all(-1), DrawMatchesFlags::DEFAULT);

drawKeypoints(src, kypts, result02, Scalar::all(-1), DrawMatchesFlags::DRAW_RICH_KEYPOINTS);

imshow("ORB keypoints (default)", result01);

imshow("ORB keypoints (rich)", result02);

waitKey(0);

destroyAllWindows();

return 0;

}

#include <opencv2/opencv.hpp>

#include <iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat src = imread("D:/images/building.png");

imshow("input", src);

//Mat gray;

//cvtColor(src, gray, COLOR_BGR2GRAY);

auto orb = ORB::create(500); // keep at most 500 keypoints; one ORB descriptor is computed per keypoint

vector<KeyPoint> kypts;

orb->detect(src, kypts);

Mat result01, result02, result03;

//drawKeypoints(src, kypts, result01, Scalar::all(-1), DrawMatchesFlags::DEFAULT);

// different radii mark keypoints from different levels of the image pyramid, i.e. keypoints found at different image scales

drawKeypoints(src, kypts, result02, Scalar::all(-1), DrawMatchesFlags::DRAW_RICH_KEYPOINTS);

Mat desc_orb;

orb->compute(src, kypts, desc_orb);

std::cout << desc_orb.rows << " x " << desc_orb.cols << std::endl;

//imshow("ORB keypoints (default)", result01);

imshow("ORB keypoints (rich)", result02);

waitKey(0);

destroyAllWindows();

return 0;

}

#include <opencv2/opencv.hpp>

#include <iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat src = imread("D:/images/building.png");

imshow("input", src);

//Mat gray;

//cvtColor(src, gray, COLOR_BGR2GRAY);

auto sift = SIFT::create(500);

vector<KeyPoint> kypts;

sift->detect(src, kypts);

Mat result01, result02, result03;

//drawKeypoints(src, kypts, result01, Scalar::all(-1), DrawMatchesFlags::DEFAULT);

drawKeypoints(src, kypts, result02, Scalar::all(-1), DrawMatchesFlags::DRAW_RICH_KEYPOINTS);

std::cout << kypts.size() << std::endl;

for (size_t i = 0; i < kypts.size(); i++) {

std::cout << "pt: " << kypts[i].pt << " angle: " << kypts[i].angle << " size: " << kypts[i].size << std::endl;

}

Mat desc_sift;

sift->compute(src, kypts, desc_sift);

std::cout << desc_sift.rows << " x " << desc_sift.cols << std::endl;

//imshow("SIFT keypoints (default)", result01);

imshow("SIFT keypoints (rich)", result02);

waitKey(0);

destroyAllWindows();

return 0;

}

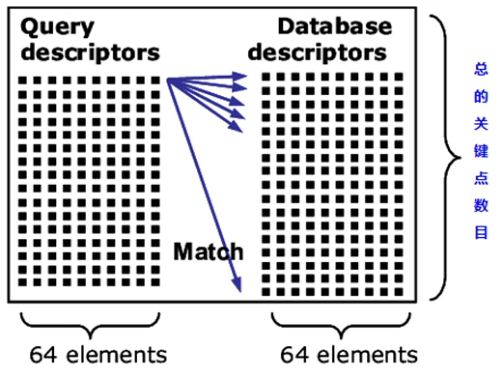

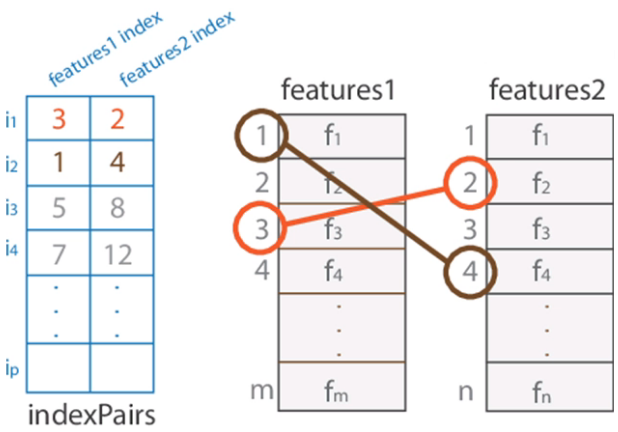

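Brute-force matching: an exhaustive search that computes the distance from each query descriptor to every candidate descriptor and returns the closest ones as the set of matches.

FLANN matching: FLANN (Fast Library for Approximate Nearest Neighbors) is an open-source library for matching high-dimensional data, released in 2009.

It supports search and matching algorithms such as KMeans, KDTree, KNN, and multi-probe LSH.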
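
To make the brute-force strategy concrete, here is a minimal sketch that finds, for every query descriptor, the train descriptor with the smallest squared L2 distance. The `Match` struct and `brute_force_match` function are illustrative assumptions that only mirror the idea behind `BFMatcher`; they are not its implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

using Descriptor = std::vector<float>;

struct Match {
    std::size_t query_idx;
    std::size_t train_idx;
    float distance;  // squared L2 distance in this sketch
};

float squared_l2(const Descriptor& a, const Descriptor& b) {
    assert(a.size() == b.size());
    float sum = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const float d = a[i] - b[i];
        sum += d * d;
    }
    return sum;
}

// Exhaustive search: every query descriptor is compared with every train
// descriptor, and the closest train descriptor is reported.
std::vector<Match> brute_force_match(const std::vector<Descriptor>& query,
                                     const std::vector<Descriptor>& train) {
    std::vector<Match> matches;
    if (train.empty()) return matches;
    for (std::size_t q = 0; q < query.size(); ++q) {
        Match best{q, 0, std::numeric_limits<float>::max()};
        for (std::size_t t = 0; t < train.size(); ++t) {
            const float d = squared_l2(query[q], train[t]);
            if (d < best.distance) {
                best.train_idx = t;
                best.distance = d;
            }
        }
        matches.push_back(best);
    }
    return matches;
}
```

The cost grows with the product of the two descriptor counts, which is the motivation for approximate libraries such as FLANN on large sets.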

| Descriptor | Distance measure for matching |
|---|---|
| SIFT, SURF, and KAZE | L1 norm |
| AKAZE, ORB, and BRISK | Hamming distance (binary descriptors) |
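
For binary descriptors, the Hamming distance is simply the number of differing bits. A minimal sketch, assuming each descriptor is stored as a byte vector (the function name `hamming_distance` is made up for this example):

```cpp
#include <bitset>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Number of bit positions in which two binary descriptors differ.
int hamming_distance(const std::vector<std::uint8_t>& a,
                     const std::vector<std::uint8_t>& b) {
    assert(a.size() == b.size());
    int distance = 0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        // XOR leaves a 1 in every differing bit; count those bits.
        distance += static_cast<int>(std::bitset<8>(a[i] ^ b[i]).count());
    }
    return distance;
}
```

An ORB descriptor is 32 bytes long, so its Hamming distance to another ORB descriptor lies between 0 and 256.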

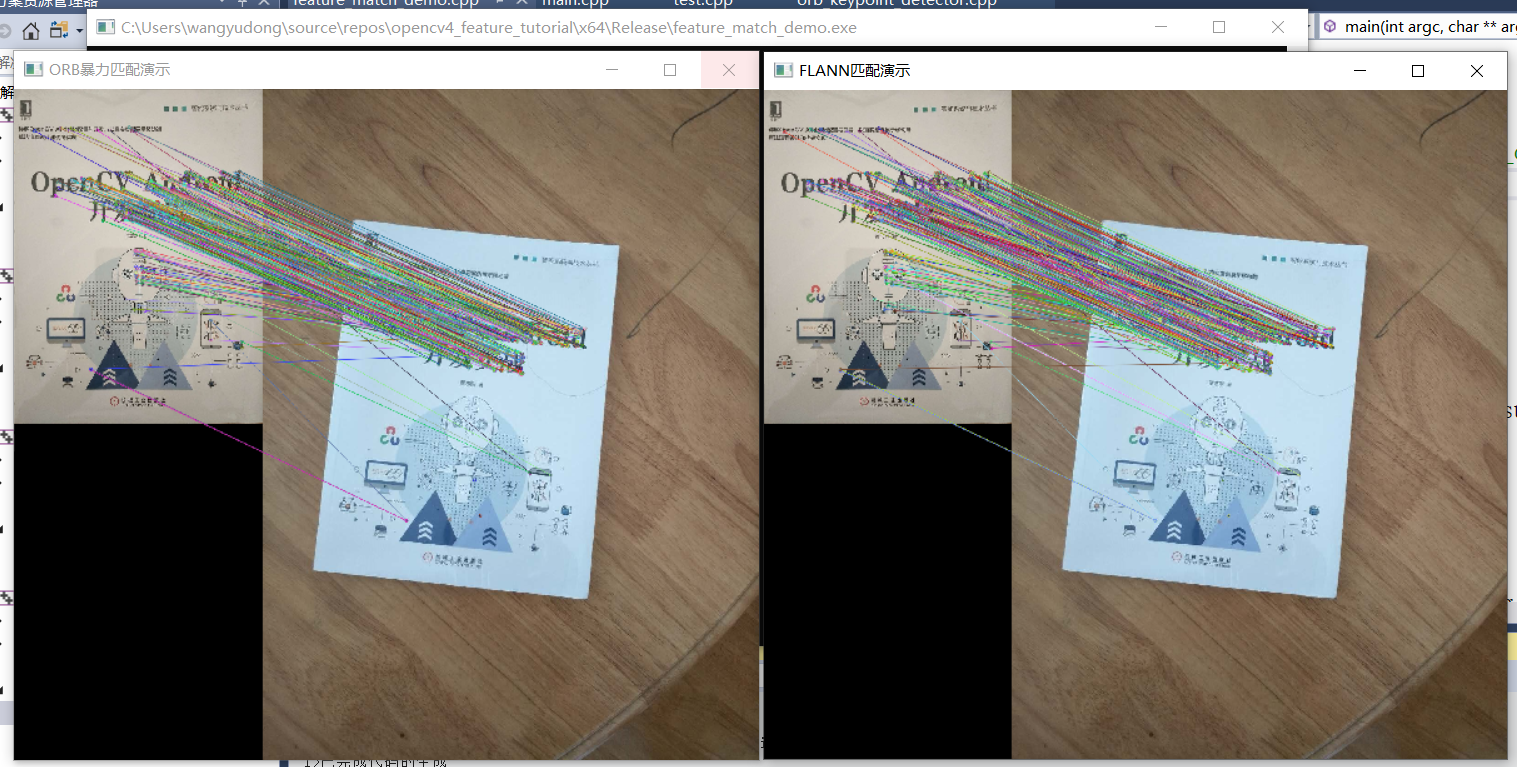

// Brute-force matching

auto bfMatcher = BFMatcher::create(NORM_HAMMING, false);

std::vector<DMatch> matches;

bfMatcher->match(box_descriptors, scene_descriptors, matches);

Mat img_orb_matches;

drawMatches(box, box_kpts, box_in_scene, scene_kpts, matches, img_orb_matches);

imshow("ORB brute-force matching demo", img_orb_matches);

// FLANN matching

auto flannMatcher = FlannBasedMatcher(new flann::LshIndexParams(6, 12, 2));

flannMatcher.match(box_descriptors, scene_descriptors, matches);

Mat img_flann_matches;

drawMatches(box, box_kpts, box_in_scene, scene_kpts, matches, img_flann_matches);

namedWindow("FLANN matching demo", WINDOW_FREERATIO);

imshow("FLANN matching demo", img_flann_matches);

DMatch data structure:

distance is the distance between the two matched descriptors; the smaller the value, the better the match.
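
Because a smaller distance means a better match, a common filter is to sort the matches by distance and keep only the best fraction. The sketch below uses a stand-in `Match` struct and a hypothetical `keep_best` helper rather than `cv::DMatch`.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct Match {
    int query_idx;
    int train_idx;
    float distance;
};

// Sorts matches by ascending distance and keeps the best keep_ratio share.
std::vector<Match> keep_best(std::vector<Match> matches, double keep_ratio) {
    assert(keep_ratio >= 0.0 && keep_ratio <= 1.0);
    std::sort(matches.begin(), matches.end(),
              [](const Match& a, const Match& b) { return a.distance < b.distance; });
    const std::size_t keep = static_cast<std::size_t>(matches.size() * keep_ratio);
    matches.erase(matches.begin() + keep, matches.end());
    return matches;
}
```

The ratio is a tuning knob: a smaller value keeps fewer but more reliable matches.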

#include <opencv2/opencv.hpp>

#include <iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat book = imread("D:/images/book.jpg");

Mat book_on_desk = imread("D:/images/book_on_desk.jpg");

imshow("book", book);

//Mat gray;

//cvtColor(src, gray, COLOR_BGR2GRAY);

vector<KeyPoint> kypts_book;

vector<KeyPoint> kypts_book_on_desk;

Mat desc_book, desc_book_on_desk;

//auto orb = ORB::create(500);

//orb->detectAndCompute(book, Mat(), kypts_book, desc_book);

//orb->detectAndCompute(book_on_desk, Mat(), kypts_book_on_desk, desc_book_on_desk);

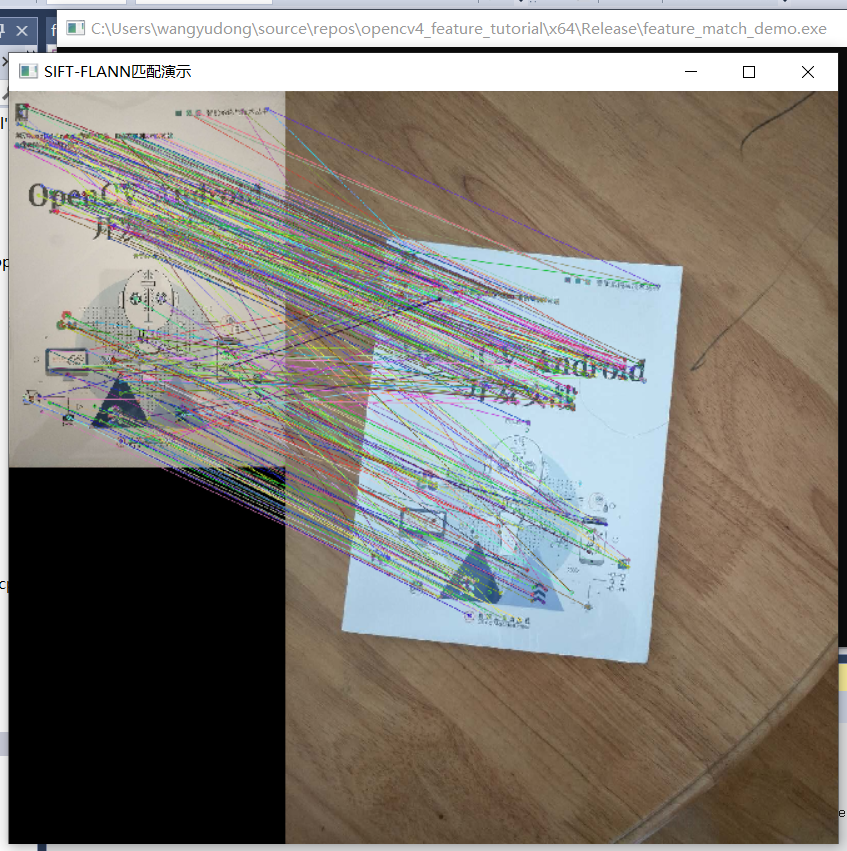

auto sift = SIFT::create(500);

sift->detectAndCompute(book, Mat(), kypts_book, desc_book);

sift->detectAndCompute(book_on_desk, Mat(), kypts_book_on_desk, desc_book_on_desk);

Mat result;

vector<DMatch> matches;

//// Brute-force matching

//auto bf_matcher = BFMatcher::create(NORM_HAMMING, false);

//bf_matcher->match(desc_book, desc_book_on_desk, matches);

//drawMatches(book, kypts_book, book_on_desk, kypts_book_on_desk, matches, result);

// FLANN matching

//auto flannMatcher = FlannBasedMatcher(new flann::LshIndexParams(6, 12, 2));

auto flannMatcher = FlannBasedMatcher();

flannMatcher.match(desc_book, desc_book_on_desk, matches);

Mat img_flann_matches;

drawMatches(book, kypts_book, book_on_desk, kypts_book_on_desk, matches, img_flann_matches);

namedWindow("SIFT-FLANN matching demo", WINDOW_FREERATIO);

imshow("SIFT-FLANN matching demo", img_flann_matches);

//namedWindow("ORB brute-force matching demo", WINDOW_FREERATIO);

//imshow("ORB brute-force matching demo", result);

waitKey(0);

destroyAllWindows();

return 0;

}

Mat cv::findHomography(

InputArray srcPoints, // points in the source plane

InputArray dstPoints, // corresponding points in the destination plane

int method = 0,

double ransacReprojThreshold = 3,

OutputArray mask = noArray(),

const int maxIters = 2000,

const double confidence = 0.995

)
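
The 3x3 matrix returned by `findHomography` maps a point of the source plane to the destination plane in homogeneous coordinates. A minimal sketch of that mapping, with a hypothetical `apply_homography` helper and a plain `Pt` struct standing in for `cv::Point2f`:

```cpp
#include <cassert>
#include <cmath>

struct Pt {
    double x;
    double y;
};

// Maps p through the homography h: multiply [x, y, 1] by h, then divide
// by the third homogeneous coordinate.
Pt apply_homography(const double (&h)[3][3], Pt p) {
    const double w = h[2][0] * p.x + h[2][1] * p.y + h[2][2];
    assert(std::fabs(w) > 1e-12);  // the point must not map to infinity
    const double x = (h[0][0] * p.x + h[0][1] * p.y + h[0][2]) / w;
    const double y = (h[1][0] * p.x + h[1][1] * p.y + h[1][2]) / w;
    return Pt{x, y};
}
```

Because of the final division, a homography is only defined up to scale: multiplying every entry of `h` by the same non-zero factor maps each point to the same place.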

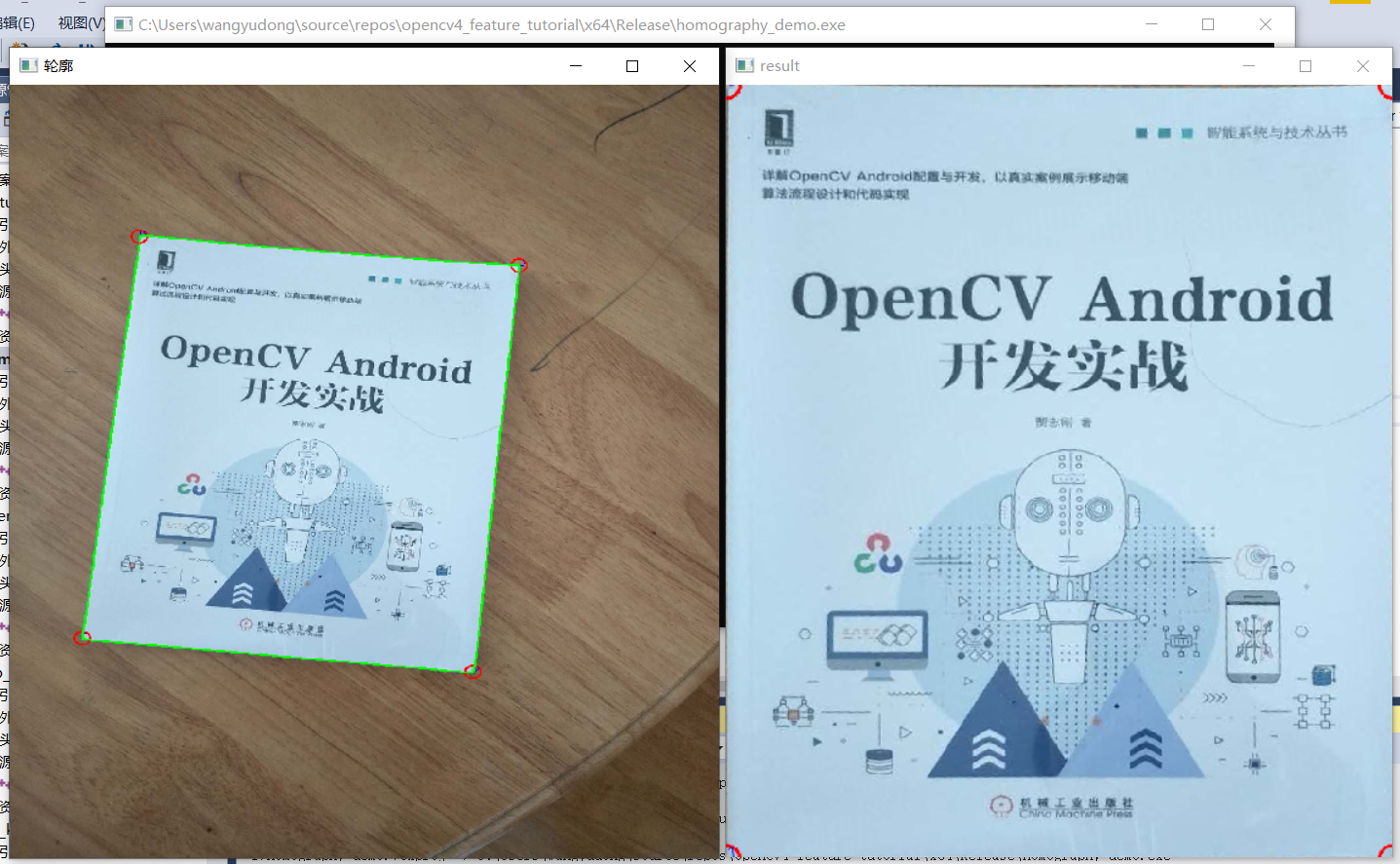

#include <opencv2/opencv.hpp>

#include <iostream>

using namespace std;

using namespace cv;

int main(int argc, char** argv) {

Mat input = imread("D:/images/book_on_desk.jpg");

Mat gray, binary;

cvtColor(input, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

vector<vector<Point>> contours;

vector<Vec4i> hierarchy;

findContours(binary, contours, hierarchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE);

int index = 0;

for (size_t i = 0; i < contours.size(); i++) {

if (contourArea(contours[i]) > contourArea(contours[index])) {

index = i;

}

}

Mat approxCurve;

approxPolyDP(contours[index], approxCurve, contours[index].size() / 10, true);

//imshow("approx", approxCurve);

//std::cout << contours.size() << std::endl;

vector<Point2f> srcPts;

vector<Point2f> dstPts;

for (int i = 0; i < approxCurve.rows; i++) {

Vec2i pt = approxCurve.at<Vec2i>(i, 0);

srcPts.push_back(Point(pt[0], pt[1]));

circle(input, Point(pt[0], pt[1]), 12, Scalar(0, 0, 255), 2, 8, 0);

putText(input, std::to_string(i), Point(pt[0], pt[1]), FONT_HERSHEY_SIMPLEX, 0.5, Scalar(255, 0, 0), 1);

}

dstPts.push_back(Point2f(0, 0));

dstPts.push_back(Point2f(0, 760));

dstPts.push_back(Point2f(585, 760));

dstPts.push_back(Point2f(585, 0));

Mat h = findHomography(srcPts, dstPts, RANSAC); // compute the homography matrix

Mat result;

warpPerspective(input, result, h, Size(585, 760)); // apply the perspective transform to the original image to obtain the rectified target region

namedWindow("result", WINDOW_FREERATIO);

imshow("result", result);

drawContours(input, contours, index, Scalar(0, 255, 0), 2, 8);

namedWindow("contour", WINDOW_FREERATIO);

imshow("contour", input);

waitKey(0);

return 0;

}
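
The program above picks the contour with the largest area before approximating it with a polygon. As a sketch of what an area comparison of that kind computes, the code below applies the shoelace (Green's) formula to a closed polygon; `polygon_area`, `largest_contour`, and the `Pt` struct are illustrative names, not OpenCV functions.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Pt {
    double x;
    double y;
};

// Area of a closed polygon via the shoelace formula.
double polygon_area(const std::vector<Pt>& contour) {
    double twice_area = 0.0;
    const std::size_t n = contour.size();
    for (std::size_t i = 0; i < n; ++i) {
        const Pt& a = contour[i];
        const Pt& b = contour[(i + 1) % n];  // wrap around to close the polygon
        twice_area += a.x * b.y - b.x * a.y;
    }
    return std::fabs(twice_area) / 2.0;
}

// Index of the contour with the largest area; the input must not be empty.
std::size_t largest_contour(const std::vector<std::vector<Pt>>& contours) {
    assert(!contours.empty());
    std::size_t best = 0;
    for (std::size_t i = 1; i < contours.size(); ++i) {
        if (polygon_area(contours[i]) > polygon_area(contours[best])) best = i;
    }
    return best;
}
```

Checking that the contour list is not empty before indexing into it, as `largest_contour` does, is also worth doing in the OpenCV program when the input image may contain no contours.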

#include <opencv2/opencv.hpp>

#include <iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

// Mat image = imread("D:/images/butterfly.jpg");

Mat book = imread("D:/images/book.jpg");

Mat book_on_desk = imread("D:/images/book_on_desk.jpg");

namedWindow("book", WINDOW_FREERATIO);

imshow("book", book);

auto orb = ORB::create(500);

vector<KeyPoint> kypts_book;

vector<KeyPoint> kypts_book_on_desk;

Mat desc_book, desc_book_on_desk;

orb->detectAndCompute(book, Mat(), kypts_book, desc_book);

orb->detectAndCompute(book_on_desk, Mat(), kypts_book_on_desk, desc_book_on_desk);

Mat result;

auto bf_matcher = BFMatcher::create(NORM_HAMMING, false);

vector<DMatch> matches;

bf_matcher->match(desc_book, desc_book_on_desk, matches);

float good_rate = 0.15f;

int num_good_matches = matches.size() * good_rate;

std::cout << num_good_matches << std::endl;

std::sort(matches.begin(), matches.end());

matches.erase(matches.begin() + num_good_matches, matches.end());

drawMatches(book, kypts_book, book_on_desk, kypts_book_on_desk, matches, result);

vector<Point2f> obj_pts;

vector<Point2f> scene_pts;

for (size_t t = 0; t < matches.size(); t++) {

obj_pts.push_back(kypts_book[matches[t].queryIdx].pt);

scene_pts.push_back(kypts_book_on_desk[matches[t].trainIdx].pt);

}

Mat h = findHomography(obj_pts, scene_pts, RANSAC); // compute the homography matrix h

vector<Point2f> srcPts;

srcPts.push_back(Point2f(0, 0));

srcPts.push_back(Point2f(book.cols, 0));

srcPts.push_back(Point2f(book.cols, book.rows));

srcPts.push_back(Point2f(0, book.rows));

std::vector<Point2f> dstPts(4);

perspectiveTransform(srcPts, dstPts, h); // compute the four corners of the book after the transform

for (int i = 0; i < 4; i++) {

line(book_on_desk, dstPts[i], dstPts[(i + 1) % 4], Scalar(0, 0, 255), 2, 8, 0);

}

namedWindow("brute-force matching", WINDOW_FREERATIO);

imshow("brute-force matching", result);

namedWindow("object detection", WINDOW_FREERATIO);

imshow("object detection", book_on_desk);

//imwrite("D:/object_find.png", book_on_desk);

waitKey(0);

return 0;

}

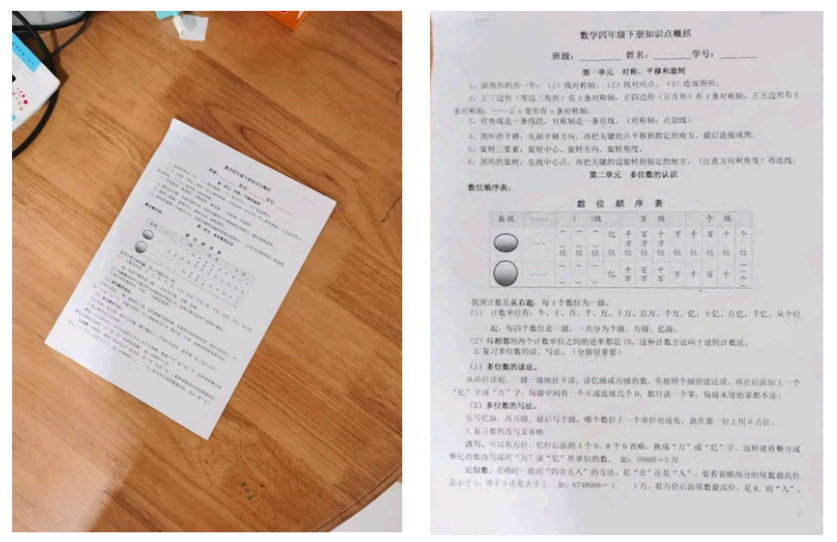

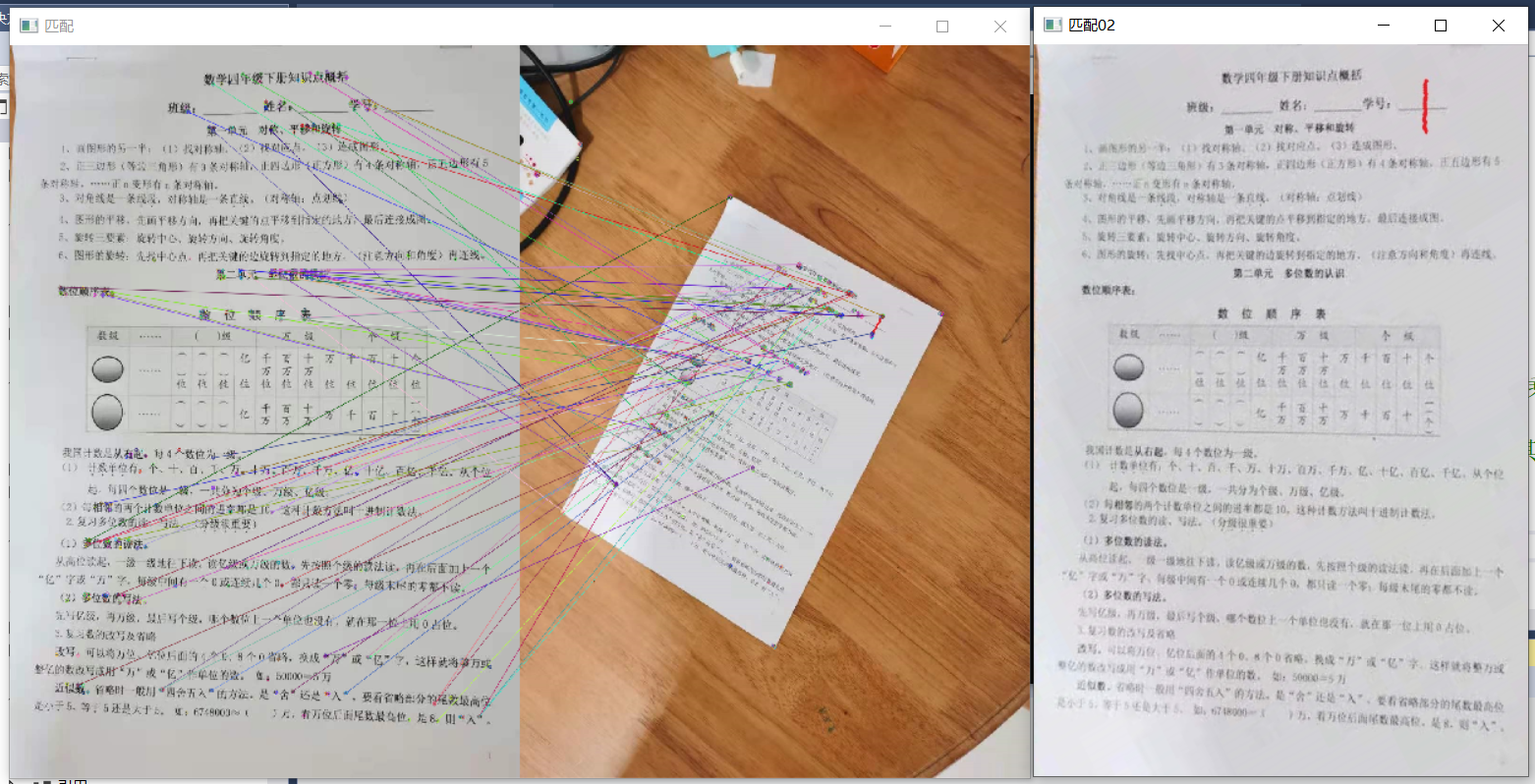

#include <opencv2/opencv.hpp>

#include <iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat ref_img = imread("D:/images/form.png");

Mat img = imread("D:/images/form_in_doc.jpg");

imshow("form template", ref_img);

auto orb = ORB::create(500);

vector<KeyPoint> kypts_ref;

vector<KeyPoint> kypts_img;

Mat desc_book, desc_book_on_desk;

orb->detectAndCompute(ref_img, Mat(), kypts_ref, desc_book);

orb->detectAndCompute(img, Mat(), kypts_img, desc_book_on_desk);

Mat result;

auto bf_matcher = BFMatcher::create(NORM_HAMMING, false);

vector<DMatch> matches;

bf_matcher->match(desc_book_on_desk, desc_book, matches);

float good_rate = 0.15f;

int num_good_matches = matches.size() * good_rate;

std::cout << num_good_matches << std::endl;

std::sort(matches.begin(), matches.end());

matches.erase(matches.begin() + num_good_matches, matches.end());

drawMatches(img, kypts_img, ref_img, kypts_ref, matches, result); // query image first: queryIdx indexes kypts_img, trainIdx indexes kypts_ref

imshow("matches", result);

imwrite("D:/images/result_doc.png", result);

// Extract location of good matches

std::vector<Point2f> points1, points2;

for (size_t i = 0; i < matches.size(); i++)

{

points1.push_back(kypts_img[matches[i].queryIdx].pt);

points2.push_back(kypts_ref[matches[i].trainIdx].pt);

}

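Mat h = findHomography(points1, points2, RANSAC); // prefer RANSAC: it tolerates wrong matches better than a plain least-squares fit

Mat aligned_doc;

warpPerspective(img, aligned_doc, h, ref_img.size()); // h maps the form region onto the template; everything that falls outside the template-sized output is cropped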

imwrite("D:/images/aligned_doc.png", aligned_doc);

waitKey(0);

destroyAllWindows();

return 0;

}

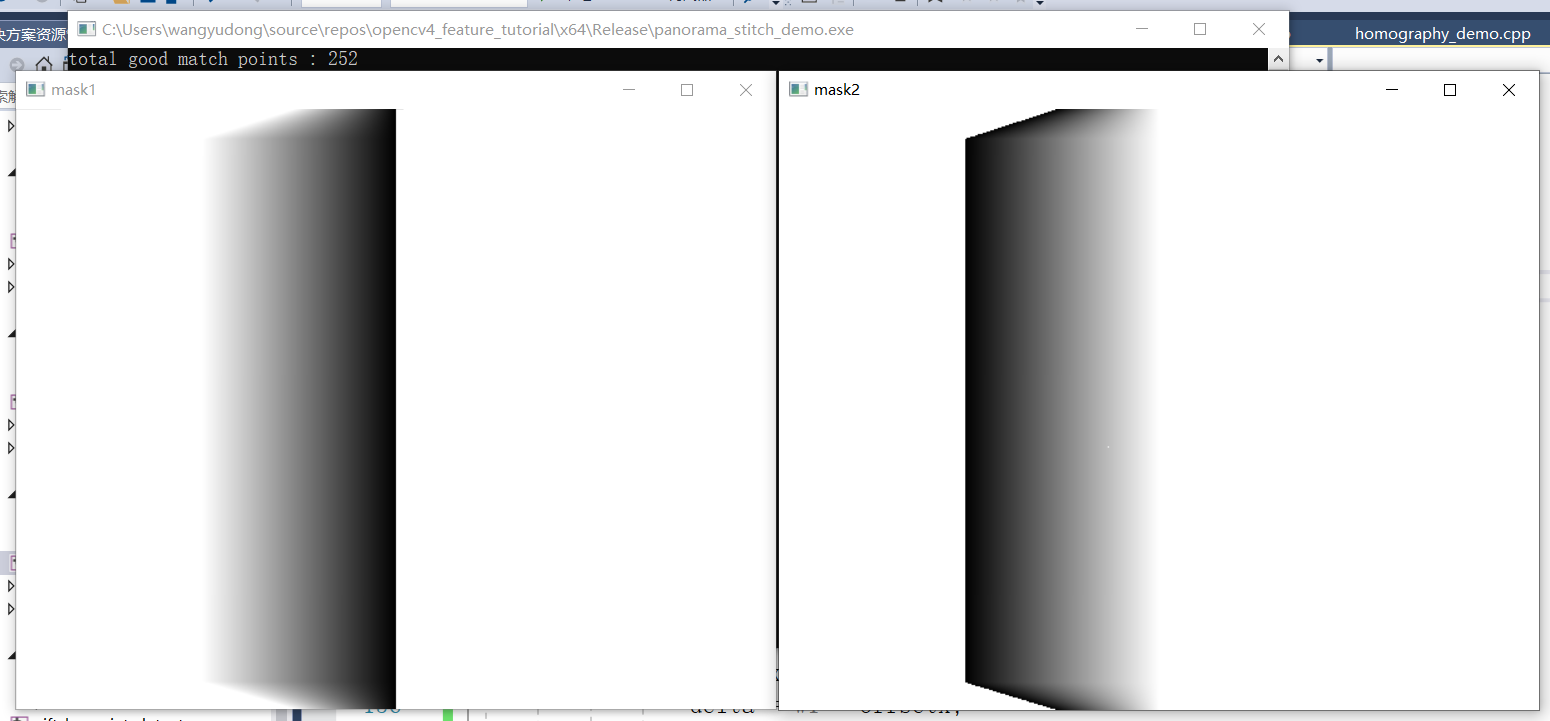
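
The stitching program that follows filters its k-nearest-neighbor matches with a ratio test: a match is kept only when the best candidate is clearly closer than the second best. A minimal sketch of that filter, with a stand-in `Match` struct and a hypothetical `ratio_test` function instead of `cv::DMatch` and `knnMatch`:

```cpp
#include <cstddef>
#include <vector>

struct Match {
    int query_idx;
    int train_idx;
    float distance;
};

// knn[i] holds the candidates for query i, sorted by ascending distance.
// A query is kept only if its best candidate beats the second best by the
// given ratio; queries with fewer than two candidates are skipped.
std::vector<Match> ratio_test(const std::vector<std::vector<Match>>& knn, float ratio) {
    std::vector<Match> good;
    for (const std::vector<Match>& candidates : knn) {
        if (candidates.size() < 2) continue;
        if (candidates[0].distance < ratio * candidates[1].distance) {
            good.push_back(candidates[0]);
        }
    }
    return good;
}
```

With a ratio of 0.7, a best distance of 10 against a runner-up of 40 is accepted, while 30 against 35 is rejected as ambiguous.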

#include <opencv2/opencv.hpp>

#include <iostream>

#define RATIO 0.8

using namespace std;

using namespace cv;

void linspace(Mat& image, float begin, float finish, int number, Mat &mask);

void generate_mask(Mat &img, Mat &mask);

int main(int argc, char** argv) {

Mat left = imread("D:/images/q11.jpg");

Mat right = imread("D:/images/q22.jpg");

if (left.empty() || right.empty()) {

printf("could not load image...\n");

return -1;

}

// Extract keypoints and descriptors

vector<KeyPoint> keypoints_right, keypoints_left;

Mat descriptors_right, descriptors_left;

auto detector = AKAZE::create();

detector->detectAndCompute(left, Mat(), keypoints_left, descriptors_left);

detector->detectAndCompute(right, Mat(), keypoints_right, descriptors_right);

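// Brute-force matching

vector<DMatch> matches;

auto matcher = DescriptorMatcher::create(DescriptorMatcher::BRUTEFORCE_HAMMING); // AKAZE's default descriptors are binary, so compare them with Hamming distance

// Find matches (two nearest neighbors per descriptor)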

std::vector< std::vector<DMatch> > knn_matches;

matcher->knnMatch(descriptors_left, descriptors_right, knn_matches, 2);

const float ratio_thresh = 0.7f;

std::vector<DMatch> good_matches;

for (size_t i = 0; i < knn_matches.size(); i++)

{

if (knn_matches[i].size() >= 2 && knn_matches[i][0].distance < ratio_thresh * knn_matches[i][1].distance)

{

good_matches.push_back(knn_matches[i][0]);

}

}

printf("total good match points : %zu\n", good_matches.size());

std::cout << std::endl;

Mat dst;

drawMatches(left, keypoints_left, right, keypoints_right, good_matches, dst);

imshow("output", dst);

imwrite("D:/images/good_matches.png", dst);

//-- Localize the object

std::vector<Point2f> left_pts;

std::vector<Point2f> right_pts;

for (size_t i = 0; i < good_matches.size(); i++)

{

// Collect all good matching points

left_pts.push_back(keypoints_left[good_matches[i].queryIdx].pt);

right_pts.push_back(keypoints_right[good_matches[i].trainIdx].pt);

}

// Registration and alignment: align to the first (left) image

Mat H = findHomography(right_pts, left_pts, RANSAC);

// Compute the panorama size

int h = max(left.rows, right.rows);

int w = left.cols + right.cols;

Mat panorama_01 = Mat::zeros(Size(w, h), CV_8UC3);

Rect roi;

roi.x = 0;

roi.y = 0;

roi.width = left.cols;

roi.height = left.rows;

// Build the left image and the aligned right image on the panorama canvas

left.copyTo(panorama_01(roi));

imwrite("D:/images/panorama_01.png", panorama_01);

Mat panorama_02;

warpPerspective(right, panorama_02, H, Size(w, h));

imwrite("D:/images/panorama_02.png", panorama_02);

// Compute the mask of the overlap region used for blending

Mat mask = Mat::zeros(Size(w, h), CV_8UC1);

generate_mask(panorama_02, mask);

// Create the weight layers and initialize the weights according to mask

Mat mask1 = Mat::ones(Size(w, h), CV_32FC1);

Mat mask2 = Mat::ones(Size(w, h), CV_32FC1);

// left mask

linspace(mask1, 1, 0, left.cols, mask);

// right mask

linspace(mask2, 0, 1, left.cols, mask);

namedWindow("mask1", WINDOW_FREERATIO);

imshow("mask1", mask1);

namedWindow("mask2", WINDOW_FREERATIO);

imshow("mask2", mask2);

// Blend the left side

Mat m1;

vector<Mat> mv;

mv.push_back(mask1);

mv.push_back(mask1);

mv.push_back(mask1);

merge(mv, m1);

panorama_01.convertTo(panorama_01, CV_32F);

multiply(panorama_01, m1, panorama_01);

// Blend the right side

mv.clear();

mv.push_back(mask2);

mv.push_back(mask2);

mv.push_back(mask2);

Mat m2;

merge(mv, m2);

panorama_02.convertTo(panorama_02, CV_32F);

multiply(panorama_02, m2, panorama_02);

// Merge into the panorama

Mat panorama;

add(panorama_01, panorama_02, panorama);

panorama.convertTo(panorama, CV_8U);

imwrite("D:/images/panorama.png", panorama);

waitKey(0);

return 0;

}

void generate_mask(Mat &img, Mat &mask) {

int w = img.cols;

int h = img.rows;

for (int row = 0; row < h; row++) {

for (int col = 0; col < w; col++) {

Vec3b p = img.at<Vec3b>(row, col);

int b = p[0];

int g = p[1];

int r = p[2];

if (b == g && g == r && r == 0) {

mask.at<uchar>(row, col) = 255;

}

}

}

imwrite("D:/images/mask.png", mask);

}

// For the zero-valued region of mask, compute the weight of each pixel row by row

void linspace(Mat& image, float begin, float finish, int w1, Mat &mask) {

int offsetx = 0;

float interval = 0;

float delta = 0;

for (int i = 0; i < image.rows; i++) {

offsetx = 0;

interval = 0;

delta = 0;

for (int j = 0; j < image.cols; j++) {

int pv = mask.at<uchar>(i, j);

if (pv == 0 && offsetx == 0) {

offsetx = j;

delta = w1 - offsetx;

interval = (finish - begin) / (delta - 1); // weight change per pixel

image.at<float>(i, j) = begin + (j - offsetx)*interval;

}

else if (pv == 0 && offsetx > 0 && (j - offsetx) < delta) {

image.at<float>(i, j) = begin + (j - offsetx)*interval;

}

}

}

}
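
The blending step of the stitching program boils down to a linear cross-fade: across the overlap, the weight of the left image ramps from 1 to 0 while the weight of the right image ramps from 0 to 1. The sketch below shows that idea for a single row; this `linspace` takes a simpler argument list than the function above, and `blend` is a hypothetical helper.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Evenly spaced weights from begin to finish (inclusive), count >= 2.
std::vector<float> linspace(float begin, float finish, int count) {
    assert(count >= 2);
    std::vector<float> weights(static_cast<std::size_t>(count));
    const float step = (finish - begin) / static_cast<float>(count - 1);
    for (int i = 0; i < count; ++i) {
        weights[static_cast<std::size_t>(i)] = begin + static_cast<float>(i) * step;
    }
    return weights;
}

// Weighted sum of the two overlapping pixels; the two weights add up to 1.
float blend(float left_pixel, float right_pixel, float left_weight) {
    return left_weight * left_pixel + (1.0f - left_weight) * right_pixel;
}
```

Because the two weights always sum to 1, the brightness in the overlap stays consistent and the seam fades gradually instead of showing a hard edge.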

ORBDetector.h

#pragma once

#include <opencv2/opencv.hpp>

class ORBDetector {

public:

ORBDetector(void);

~ORBDetector(void);

void initORB(cv::Mat &refImg);

bool detect_and_analysis(cv::Mat &image, cv::Mat &aligned);

private:

cv::Ptr<cv::ORB> orb = cv::ORB::create(500);

std::vector<cv::KeyPoint> tpl_kps;

cv::Mat tpl_descriptors;

cv::Mat tpl;

};

ORBDetector.cpp

#include "ORBDetector.h"

#include <iostream>

ORBDetector::ORBDetector() {

std::cout << "create orb detector..." << std::endl;

}

ORBDetector::~ORBDetector() {

this->tpl_descriptors.release();

this->tpl_kps.clear();

this->orb.release();

this->tpl.release();

std::cout << "destroy instance..." << std::endl;

}

void ORBDetector::initORB(cv::Mat &refImg) {

if (!refImg.empty()) {

cv::Mat tplGray;

cv::cvtColor(refImg, tplGray, cv::COLOR_BGR2GRAY);

orb->detectAndCompute(tplGray, cv::Mat(), this->tpl_kps, this->tpl_descriptors);

tplGray.copyTo(this->tpl);

}

}

bool ORBDetector::detect_and_analysis(cv::Mat &image, cv::Mat &aligned) {

// keypoints and match threshold

float GOOD_MATCH_PERCENT = 0.15f;

bool found = true;

// Process each image in the dataset

cv::Mat img2Gray;

cv::cvtColor(image, img2Gray, cv::COLOR_BGR2GRAY);

std::vector<cv::KeyPoint> img_kps;

cv::Mat img_descriptors;

orb->detectAndCompute(img2Gray, cv::Mat(), img_kps, img_descriptors);

std::vector<cv::DMatch> matches;

cv::Ptr<cv::DescriptorMatcher> matcher = cv::DescriptorMatcher::create("BruteForce-Hamming");

// auto flann_matcher = cv::FlannBasedMatcher(new cv::flann::LshIndexParams(6, 12, 2));

matcher->match(img_descriptors, this->tpl_descriptors, matches, cv::Mat());

// Sort matches by score

std::sort(matches.begin(), matches.end());

// Remove not so good matches

const int numGoodMatches = matches.size() * GOOD_MATCH_PERCENT;

matches.erase(matches.begin() + numGoodMatches, matches.end());

// std::cout << numGoodMatches <<"distance:"<<matches [0].distance<< std::endl;

// findHomography needs at least four point pairs

if (matches.size() < 4) {

return false;

}

if (matches[0].distance > 30) {

found = false;

}

// Extract location of good matches

std::vector<cv::Point2f> points1, points2;

for (size_t i = 0; i < matches.size(); i++)

{

points1.push_back(img_kps[matches[i].queryIdx].pt);

points2.push_back(tpl_kps[matches[i].trainIdx].pt);

}

cv::Mat H = findHomography(points1, points2, cv::RANSAC);

cv::Mat im2Reg;

warpPerspective(image, im2Reg, H, tpl.size());

// Rotate 90 degrees counterclockwise

cv::Mat result;

cv::rotate(im2Reg, result, cv::ROTATE_90_COUNTERCLOCKWISE);

result.copyTo(aligned);

return found;

}

object_analysis.cpp

#include "ORBDetector.h"

#include <iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat refImg = imread("D:/facedb/tiaoma/tpl2.png");

ORBDetector orb_detector;

orb_detector.initORB(refImg);

vector<std::string> files;

glob("D:/facedb/orb_barcode", files);

cv::Mat temp;

for (auto file : files) {

std::cout << file << std::endl;

cv::Mat image = imread(file);

int64 start = getTickCount();

bool OK = orb_detector.detect_and_analysis(image, temp);

double ct = (getTickCount() - start) / getTickFrequency();

printf("decode time: %.5f ms\n", ct * 1000);

std::cout << "label found: " << (OK == true) << std::endl;

imshow("temp", temp);

waitKey(0);

}

}

Net net = readNetFromTensorflow(model, config); // TensorFlow models

Net net = readNetFromCaffe(config, model); // Caffe models

Net net = readNetFromONNX(onnxfile);

// Read the data

Mat image = imread("D:/images/example.png");

Mat blob_img = blobFromImage(image, scalefactor, size, mean, swapRB);

net.setInput(blob_img);

// Inference output

Mat result = net.forward();
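
To show what the blob passed to `setInput` looks like, the sketch below converts an interleaved 8-bit BGR buffer into a planar float layout while applying `mean`, `scalefactor`, and `swapRB`. It is a simplified stand-in under stated assumptions: the function `to_blob` is hypothetical, it skips the resizing and cropping that `blobFromImage` can perform, and it assumes the mean is given in output-channel order.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Converts an interleaved BGR image (height x width x 3) into a planar
// float blob (3 x height x width): blob = (pixel - mean) * scale.
std::vector<float> to_blob(const std::vector<std::uint8_t>& bgr, int width, int height,
                           float scale, const float mean[3], bool swap_rb) {
    const std::size_t plane = static_cast<std::size_t>(width) * static_cast<std::size_t>(height);
    assert(bgr.size() == plane * 3);
    std::vector<float> blob(plane * 3);
    for (std::size_t i = 0; i < plane; ++i) {
        for (std::size_t c = 0; c < 3; ++c) {
            // With swap_rb, output channel 0 reads the R value (input channel 2).
            const std::size_t src_c = swap_rb ? 2 - c : c;
            blob[c * plane + i] = (static_cast<float>(bgr[i * 3 + src_c]) - mean[c]) * scale;
        }
    }
    return blob;
}
```

The planar layout is what most networks expect as input, which is why the conversion happens before `setInput` rather than inside the model.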
